
NVIDIA H200 vs. NVIDIA H100 Tensor Core GPUs: A Comparison

Welcome to the forefront of GPU innovation. In this article, we take a close look at two of NVIDIA's data center GPUs built on the Hopper architecture: the NVIDIA H100 and its successor, the NVIDIA H200. Both represent the state of the art in AI computing, and the H200 builds on the H100 by pairing the same compute engine with substantially larger and faster memory.

Background on the Evolution of Nvidia GPUs

Nvidia, a name synonymous with cutting-edge graphics technology, has been a pioneering force in the GPU market. From its early days of revolutionizing 3D gaming to its current role in powering AI, data science, large language models (LLMs), and high-performance computing (HPC), Nvidia's journey is one of constant evolution and innovation. The development of the NVIDIA H100 and H200 GPUs marks the latest chapter in this ongoing story, with each iteration bringing us closer to the future of high-performance computing.

Importance of GPUs in Modern Computing

GPUs have transcended their original role of rendering graphics, becoming integral to a variety of demanding applications. Today, they are at the heart of AI research, deep learning, scientific simulations, and much more. Their ability to process parallel tasks efficiently makes them indispensable in our data-driven world.

Introduction to Nvidia H200 and H100

The Nvidia H200 and H100 are not graphics cards in the traditional sense: they are data center accelerators with no display outputs. Designed to tackle everything from AI training and inference to complex scientific simulations, these GPUs are engineering marvels, boasting impressive specifications and features.

Purpose and Scope of the Comparative Analysis

This article aims to provide a detailed comparative analysis of the Nvidia H200 and H100 GPUs. We will delve into their specifications, performance characteristics, and real-world applications, offering insights for both tech enthusiasts and professionals. By examining these GPUs side by side, we can better understand their capabilities, target markets, and potential impact on the future of computing technology.

NVIDIA H100, H200 Technical Specifications

The NVIDIA H100 and H200 are data center graphics processing units (GPUs) tailored for high-performance computing (HPC), artificial intelligence (AI), and machine learning (ML) tasks. Both are built on NVIDIA's Hopper architecture around the same GH100 GPU: the H200 is not a new architecture but a memory upgrade that pairs the H100's compute engine with larger, faster HBM3e memory. (Blackwell is the architecture that follows Hopper.) Below is an overview of their technical specifications, presented for an engineering audience. Figures are for the SXM form factor unless noted otherwise.

NVIDIA H100 (Hopper Architecture)

GPU Architecture: Hopper

- CUDA Cores: 16,896 FP32 CUDA cores on the SXM5 version (14,592 on the PCIe card), roughly 2.4x the 6,912 of the previous-generation A100, for greatly improved parallel processing capabilities.

- Memory: 80 GB of HBM3 (High Bandwidth Memory) on the SXM5 version (the PCIe card uses 80 GB of HBM2e), supporting large datasets and complex computations.

- Memory Bandwidth: About 3.35 TB/s on the SXM5 version and about 2 TB/s on the PCIe card, facilitating faster data transfer and improved performance for data-intensive applications.

- Fourth-generation Tensor Cores: Tensor Cores designed specifically for AI and deep learning, supporting a variety of precision formats (FP8, FP16, BF16, TF32, FP64, INT8). The Transformer Engine dynamically chooses between FP8 and 16-bit precision for transformer layers.

- NVLink: Fourth-generation NVLink with 900 GB/s of total bandwidth per GPU (SXM5), enabling efficient scaling of performance in multi-GPU configurations.

- PCIe Gen 5.0 Support: Provides double the bandwidth of PCIe Gen 4.0, enhancing data transfer speeds between the GPU and other components.

- DPX Instructions: New instructions that accelerate dynamic programming algorithms, such as Smith-Waterman for genomic sequence alignment and Floyd-Warshall for route optimization.

- Energy Efficiency: Higher performance per watt than the previous-generation A100, with a configurable power limit of up to 700 W on SXM5, reducing operational costs in data centers per unit of work.
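DPX instructions target dynamic programming, where each cell of a table is filled by adding constants to neighboring cells and taking a maximum or minimum. As a rough illustration of that computational pattern only, the plain-Python sketch below computes a Smith-Waterman local-alignment score. It runs on the CPU and uses no DPX or CUDA features; the function name and the `match`, `mismatch`, and `gap` scores are arbitrary assumptions for illustration.

```python
def smith_waterman_score(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = -2) -> int:
    """Best local-alignment score of a and b with a linear gap penalty.

    Every cell is a max over a few "neighbor + constant" terms: the
    add-then-max pattern that Hopper's DPX instructions accelerate in hardware.
    (Illustrative sketch; scoring parameters are assumptions.)
    """
    rows, cols = len(a) + 1, len(b) + 1
    # H[i][j]: best score of a local alignment ending at a[i-1] and b[j-1]
    H = [[0] * cols for _ in range(rows)]
    best = 0
    for i in range(1, rows):
        for j in range(1, cols):
            diag = H[i - 1][j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            up = H[i - 1][j] + gap    # a[i-1] aligned against a gap
            left = H[i][j - 1] + gap  # b[j-1] aligned against a gap
            H[i][j] = max(0, diag, up, left)  # clamping at 0 makes it a local alignment
            best = max(best, H[i][j])
    return best


score = smith_waterman_score("ACGT", "ACGT")  # 4 matches x 2 = 8
```

The table has one cell per pair of characters, so long genomic sequences produce billions of these small add-and-max steps, which is why fusing them into single instructions pays off.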

NVIDIA H200 (Hopper Architecture)

GPU Architecture: Hopper

- CUDA Cores: The H200 uses the same GH100 GPU as the H100, so its CUDA core count (16,896 on SXM) and peak compute throughput are unchanged; its gains come from the memory subsystem.

- Memory: 141 GB of HBM3e, nearly 1.8x the H100's 80 GB, enough to hold larger datasets and more complex AI models on a single GPU.

- Memory Bandwidth: 4.8 TB/s, about 1.4x the H100 SXM5's 3.35 TB/s, which directly speeds up memory-bound workloads.

- Fourth-generation Tensor Cores: The same Tensor Cores and Transformer Engine as the H100, with the same precision formats (FP8, FP16, BF16, TF32, FP64, INT8).

- NVLink: The same fourth-generation NVLink as the H100, at 900 GB/s of total bandwidth per GPU.

- PCIe Gen 5.0 Support: The same host interface as the H100.

- DPX Instructions: The same dynamic programming instructions as the H100.

- Energy Efficiency: A configurable power limit of up to 700 W on SXM, the same envelope as the H100 SXM5, so finishing memory-bound workloads faster translates into better performance per watt on those workloads.
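Because both GPUs share the same compute engine but differ in memory bandwidth, a simple roofline model shows where the H200's advantage appears. The sketch below assumes approximate SXM datasheet figures of about 989 TFLOPS of dense BF16 Tensor Core throughput for both GPUs (half of the with-sparsity figure) and 3.35 TB/s vs. 4.8 TB/s of bandwidth; the `GpuSpec` class and the kernel intensities are hypothetical, and real kernels rarely reach peak figures.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    dense_bf16_tflops: float  # approx. peak dense BF16 Tensor Core throughput (assumed)
    mem_bw_tb_s: float        # peak HBM bandwidth in TB/s

    def ridge_point(self) -> float:
        """Arithmetic intensity (FLOP/byte) where the roofline turns from memory- to compute-bound.

        TFLOP/s divided by TB/s leaves FLOP/byte, so the 1e12 factors cancel.
        """
        return self.dense_bf16_tflops / self.mem_bw_tb_s

    def attainable_tflops(self, intensity: float) -> float:
        """Roofline estimate: the lower of peak compute and bandwidth x intensity."""
        return min(self.dense_bf16_tflops, self.mem_bw_tb_s * intensity)


# Approximate SXM datasheet figures (dense = half of the with-sparsity number).
H100 = GpuSpec("H100 SXM5", dense_bf16_tflops=989.0, mem_bw_tb_s=3.35)
H200 = GpuSpec("H200 SXM", dense_bf16_tflops=989.0, mem_bw_tb_s=4.8)

for gpu in (H100, H200):
    print(f"{gpu.name}: ridge point ~{gpu.ridge_point():.0f} FLOP/byte")

# Hypothetical kernels with low, medium, and high arithmetic intensity.
for intensity in (50, 250, 500):
    ratio = H200.attainable_tflops(intensity) / H100.attainable_tflops(intensity)
    print(f"{intensity:>3} FLOP/byte: H200/H100 roofline ratio {ratio:.2f}x")
```

Under these assumptions the ratio is about 1.43x for the low-intensity kernel, about 1.18x for the medium one, and 1.00x once both GPUs are compute-bound, which is why the H200's advantage concentrates in memory-bound work.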

Direct Comparison

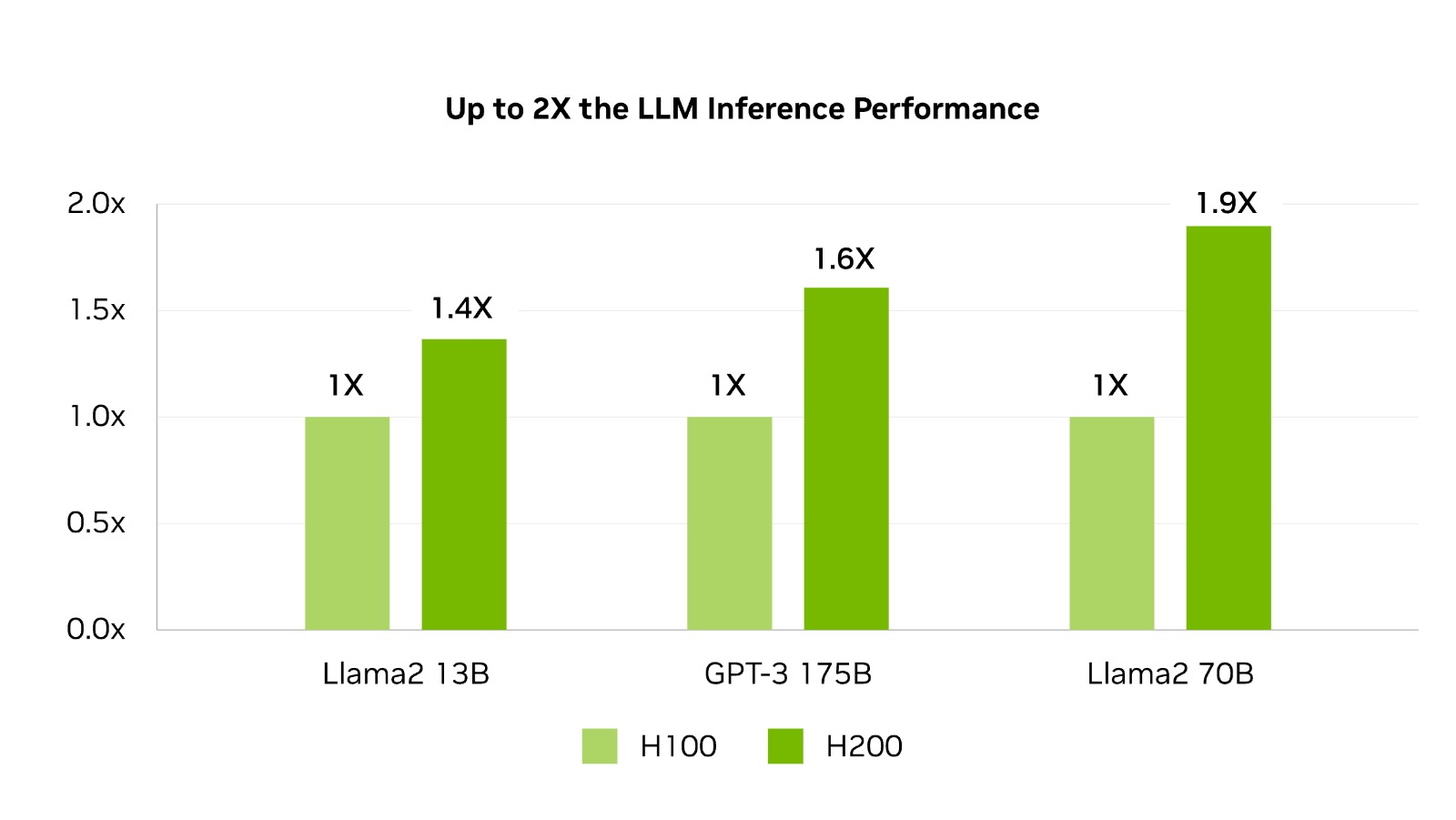

Performance: Speed, Efficiency, and Capability

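- Computing Power: Both GPUs use the same GH100 compute engine, so their peak FLOPS figures match for the same form factor. The H200 pulls ahead on memory-bound workloads, such as large language model (LLM) inference, where its larger capacity and roughly 1.4x higher bandwidth let it serve bigger models and larger batches per GPU and finish those jobs faster.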

- Energy Efficiency: Both GPUs are designed with energy efficiency in mind. The H200 operates in the same power envelope as the H100 (up to 700 W on SXM) while completing memory-bound workloads faster, so it delivers more work per watt on those workloads.

- Thermal Management: Because the H200 shares the H100's power envelope and is compatible with HGX H100 system hardware, its cooling requirements are similar. The SXM versions of both GPUs are designed for server platforms with high-airflow or liquid cooling, which is essential for sustained performance under heavy loads.
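A back-of-the-envelope way to see why memory bandwidth matters for LLM inference: in batch-1 autoregressive decoding, every generated token requires streaming all model weights from HBM, so bandwidth divided by weight size bounds tokens per second. The sketch below is a simplified upper bound under that assumption; the helper name and the 70B-parameter FP8 model are hypothetical, and it ignores KV-cache traffic, kernel overheads, and compute limits.

```python
def max_decode_tokens_per_s(params_billion: float, bytes_per_param: float, mem_bw_tb_s: float) -> float:
    """Upper bound on batch-1 decode speed when each token must stream all weights from HBM.

    Ignores KV-cache reads, activations, kernel overheads, and compute limits,
    so real throughput will be lower.
    """
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return (mem_bw_tb_s * 1e12) / weight_bytes


# Hypothetical 70B-parameter model with FP8 weights (1 byte per parameter).
for name, bw in (("H100 SXM5", 3.35), ("H200 SXM", 4.8)):
    print(f"{name}: <= {max_decode_tokens_per_s(70, 1.0, bw):.1f} tokens/s")
```

With these inputs the bounds come out to roughly 48 tokens/s for the H100 SXM5 and 69 tokens/s for the H200, the same 1.4x ratio as the bandwidth figures. Batching amortizes weight reads across requests, which is where the H200's extra capacity for KV cache also helps.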

Technological Advancements

- AI and Machine Learning Capabilities: While both GPUs are equipped for AI and machine learning tasks, the H200's larger, faster memory lets a single GPU hold larger models and longer contexts and speeds up memory-bound inference, making it a preferred choice for cutting-edge large-model research and deployment.

- Ray Tracing and Graphics Rendering: Neither GPU is a graphics product. The GH100 chip has no RT Cores and the boards have no display outputs, so professionals in gaming and animation who need hardware ray tracing and real-time rendering are better served by NVIDIA's RTX-class GPUs.

- Data Processing and Analytics: The H200's higher memory capacity and bandwidth make it more suitable for large-scale, bandwidth-bound data analytics, offering faster insights and more efficient processing.
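To make "larger models" concrete, weight memory is roughly parameter count times bytes per parameter for the chosen precision. The sketch below checks whether a model's weights, plus headroom, fit on a single GPU; the helper names, the 70B-parameter example, and the 20% headroom for KV cache and activations are hypothetical assumptions, and capacities are treated as decimal gigabytes.

```python
# Bytes per parameter for common Tensor Core formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Memory for the weights alone, in decimal GB (1 GB = 1e9 bytes)."""
    # (params_billion * 1e9) * bytes / 1e9 simplifies to params_billion * bytes
    return params_billion * BYTES_PER_PARAM[dtype]


def fits_on_one_gpu(params_billion: float, dtype: str, capacity_gb: float,
                    headroom: float = 0.2) -> bool:
    """True if weights plus a headroom fraction fit in one GPU's HBM.

    The 20% default headroom for KV cache, activations, and runtime buffers is
    an arbitrary assumption; real needs depend on batch size and context length.
    """
    return weights_gb(params_billion, dtype) * (1 + headroom) <= capacity_gb


for dtype in ("bf16", "fp8"):
    for name, capacity in (("H100 (80 GB)", 80), ("H200 (141 GB)", 141)):
        print(f"70B params, {dtype} on {name}: "
              f"{weights_gb(70, dtype):.0f} GB of weights, "
              f"fits={fits_on_one_gpu(70, dtype, capacity)}")
```

Under the 20% headroom assumption, a 70B-parameter model in BF16 (140 GB of weights) fits on neither GPU alone, while the FP8 version (70 GB of weights) fits on the H200 but not on the H100.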

Market Impact

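- Pricing and Accessibility: The H200, while more advanced, also comes with a higher price tag. Both are data center products sold mainly through server vendors and cloud providers rather than to consumers, so cost and availability can limit access, particularly for smaller organizations.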

- Impact on Various Industries: Both GPUs have a significant impact on industries like AI research, scientific computing, and cloud data centers. However, the H200's larger, faster memory makes it more appealing for the most memory-intensive enterprise applications in these sectors.

- Market Positioning: Neither GPU targets consumers. The H100 maintains strong appeal due to its balance of performance, maturity, and cost. The H200 is targeted towards enterprise users whose workloads are limited by memory capacity or bandwidth.

Case Studies and Real-World Applications

Gaming and VR

Despite the "GPU" name, the H100 and H200 are not designed for gaming or virtual reality: they have no display outputs, and the GH100 chip lacks the RT Cores used for hardware ray tracing. Their impact on gaming and VR is indirect, through the AI models that are trained and served on data center hardware.

AI and Machine Learning

Both GPUs have made notable contributions to the field of AI and machine learning. The H100's capabilities have empowered researchers and developers to train and deploy larger, more capable AI models. The H200 takes this a step further: its extra memory capacity and bandwidth let it hold larger models and batches per GPU and speed up memory-bound steps, particularly in large language model inference.

Scientific Research and Simulations

In scientific research and simulations, these GPUs have been game-changers. The H100 has been instrumental in running large-scale simulations, from climate modeling to astrophysics. The H200's larger memory allows bigger problem sizes per GPU, and its higher bandwidth speeds up memory-bound simulation codes, pushing the boundaries of scientific discovery.

Enterprise Solutions and Data Centers

For enterprise solutions and data centers, both the H100 and H200 offer significant benefits. The H100's balance of performance and efficiency makes it a reliable choice for various enterprise applications. The H200, on the other hand, is ideal for data centers whose workloads need the most memory capacity and bandwidth per GPU, particularly in handling large AI models, big data, and complex analytics.

Challenges and Limitations

Compatibility and Integration Issues

While the H200 and H100 GPUs offer impressive performance, they also present challenges in compatibility and integration. Users may encounter issues when integrating these GPUs into existing systems, particularly concerning hardware compatibility, software drivers, and support for specific applications or frameworks.

Cost-Benefit Analysis

The high cost of the H200 and H100 GPUs necessitates a thorough cost-benefit analysis for potential users. While they offer superior performance, the investment may not be justifiable for all applications. Users need to consider the return on investment, particularly in contexts where the additional computational power does not translate into proportional benefits.
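One simple way to frame the return-on-investment question for inference is cost per unit of output. The sketch below compares two options by cost per million generated tokens; the hourly cost can stand for a cloud rate or an amortized purchase-plus-power figure. The helper names are hypothetical, and the input numbers are placeholders, not real prices or benchmark results.

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_s: float) -> float:
    """Dollars per one million generated tokens at a sustained throughput."""
    tokens_per_hour = tokens_per_s * 3600
    return hourly_cost_usd * 1_000_000 / tokens_per_hour


def upgrade_pays_off(cost_a: float, tps_a: float, cost_b: float, tps_b: float) -> bool:
    """True if option B produces tokens more cheaply than option A.

    Equivalent to B's throughput gain exceeding its cost premium:
    tps_b / tps_a > cost_b / cost_a.
    """
    return cost_per_million_tokens(cost_b, tps_b) < cost_per_million_tokens(cost_a, tps_a)


# Placeholder inputs for illustration only; substitute your own quotes and measurements.
current = {"hourly_cost_usd": 4.0, "tokens_per_s": 1000.0}
candidate = {"hourly_cost_usd": 5.0, "tokens_per_s": 1300.0}

print(f"current:   ${cost_per_million_tokens(**current):.2f} per 1M tokens")
print(f"candidate: ${cost_per_million_tokens(**candidate):.2f} per 1M tokens")
print("upgrade pays off:", upgrade_pays_off(current["hourly_cost_usd"], current["tokens_per_s"],
                                            candidate["hourly_cost_usd"], candidate["tokens_per_s"]))
```

The break-even rule in the docstring is the key point: a more expensive GPU only lowers cost per token if its measured throughput gain on your workload exceeds its price premium.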

Future-Proofing and Upgrade Pathways

Future-proofing is a significant concern with rapidly advancing GPU technology. The H200 and H100, while cutting-edge today, may soon be surpassed by newer models. Users need to consider upgrade pathways and the potential obsolescence of these GPUs, weighing the benefits of investing in the latest technology against the possibility of needing to upgrade again in the near future.

BIZON NVIDIA H100, H200 Servers and Workstations

BIZON specializes in high-performance computing solutions tailored for AI and machine learning workloads.

If you have any questions about building your next high-performance system, BIZON engineers are ready to help. Explore our NVIDIA H100 workstations optimized for generative AI and our NVIDIA H100 GPU servers.

- High-Performance Hardware: Equipped with the latest GPUs and CPUs to handle large models and datasets efficiently.

- Scalability: Designed to scale up, allowing additional GPUs to be added as computational needs grow.

- Reliability: Built with enterprise-grade components for 24/7 operation, ensuring consistent performance.

- Support: Comprehensive support and maintenance services to assist with setup, optimization, and troubleshooting.

Conclusion

This comparative analysis has highlighted the significant advancements NVIDIA has made with its H200 and H100 GPUs. Both share the Hopper compute engine, fourth-generation Tensor Cores, and fourth-generation NVLink, while the H200's larger, faster HBM3e memory gives it the edge in memory-bound work. While the H100 remains a proven choice for a wide range of professional and data center workloads, the H200 excels in high-end, enterprise-level applications, offering exceptional capabilities in large-model AI, machine learning, and memory-intensive scientific research.

The H200 and H100 are not just GPUs; they represent the pinnacle of current accelerated computing technology. The H100 serves as a robust, versatile option for a wide range of users. In contrast, the H200 is a testament to Nvidia's vision for the future, pushing the boundaries of what's possible in high-performance computing and AI applications.

Looking ahead, Nvidia's continued innovation in GPU technology seems poised to redefine computing paradigms. The advancements seen in the H200 and H100 GPUs are just the beginning. As Nvidia continues to innovate, we can expect GPUs to become even more integral to technological progress, especially in fields like AI, deep learning, and data processing. The future of Nvidia, and GPU technology as a whole, is bright and holds exciting prospects for the tech world.

The NVIDIA H200 and H100 GPUs, available in BIZON high-performance workstations and servers, represent the state of the art in accelerated computing across diverse fields. They serve a broad spectrum of demanding applications, from AI and deep learning to data science and scientific research. Their capabilities in handling complex tasks like LLM training and inference make them outstanding choices for professionals seeking to harness the power of advanced computing. As technology continues to evolve, keeping up with leading tools such as the H200 and H100 is essential for those pushing the boundaries in AI, scientific simulation, and beyond.