Table of Contents

- NVIDIA RTX 5090 vs. RTX 4090

- GPU Specifications and Pricing

- Benchmark Data and Performance Analysis

- Visual Data: Charts and Graphs

- Price Analysis and Cost Efficiency

- In-Depth Analysis of Core Improvements

- Real-World Performance and Use Cases

- Technical Considerations and Best Practices

- Price-to-Performance Ratio: A Balanced Perspective

- Conclusion: The Future of Professional GPUs

- Conclusion and Final Thoughts

NVIDIA RTX 5090 vs. RTX 4090 – Comparison and Benchmarks for AI and LLM Workloads

NVIDIA RTX 5090 vs. RTX 4090

In the rapidly evolving world of artificial intelligence and deep learning, hardware choices can significantly influence both research outcomes and operational efficiency. As AI models grow larger and more complex, choosing the right high-performance GPU matters more than ever. This article provides an objective comparison of two leading GPUs, the NVIDIA RTX 5090 and the RTX 4090, focusing on their technical specifications, real-world AI benchmarks including LLM inference performance, and price-to-performance ratios.

Whether you are a researcher, data scientist, or IT professional, this analysis is designed to provide you with the detailed information necessary to make an informed decision. By examining key performance metrics and comparing real-world test data, we aim to present a balanced view that allows you to evaluate which GPU best meets your needs.

In this article, you will learn about:

- The detailed technical specifications and pricing of the NVIDIA RTX 5090 and RTX 4090.

- Benchmark results from LLM inference tests on several Llama model configurations.

- Charts and graphs that visualize performance, core improvements, and overall efficiency.

- A thorough price analysis and discussion of cost efficiency.

- Insights into how these GPUs perform in real-world scenarios and what that might mean for your projects.

GPU Specifications and Pricing

Before diving into the performance comparisons, it is essential to review the hardware specifications and pricing details of the two GPUs under discussion. Both the RTX 5090 and RTX 4090 are designed for high-performance computing, but differences in their core configurations and memory capacities set them apart.

NVIDIA RTX 5090 32GB

- CUDA Cores: 21,760

- Tensor Cores: 680

- VRAM: 32GB

- Price: $2,600

The NVIDIA RTX 5090 is positioned as a cutting-edge GPU for demanding AI workloads and LLMs. With substantially more CUDA and Tensor cores than the RTX 4090, it offers greater parallel processing capability. Its 32GB of VRAM is particularly advantageous for users working with large AI models, because larger models, higher-precision weights, or longer contexts can stay on a single card before memory capacity becomes the bottleneck.

NVIDIA RTX 4090 24GB

- CUDA Cores: 16,384

- Tensor Cores: 512

- VRAM: 24GB

- Price: $1,800

The RTX 4090 has long been recognized for its robust performance in various high-end applications. Featuring 16,384 CUDA cores and 512 Tensor cores, along with 24GB of VRAM, it continues to deliver strong performance at a more accessible price point. Its balanced configuration makes it suitable for a wide range of AI tasks, from deep learning training to real-time inference.
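To make the 24GB versus 32GB difference concrete, here is a minimal weights-only sketch that checks whether a model's parameters fit on each card. It assumes memory ≈ parameters × bits per weight / 8, treats each card's capacity as GiB, and reserves a hypothetical 2 GiB of headroom. It ignores the KV cache, activations, and framework overhead, which grow with context length and batch size, so a "fits" result is optimistic. The model sizes in the example are illustrative, not tested configurations.

```python
# Weights-only VRAM check: a rough sketch, not a sizing tool.
# Assumptions: capacity is treated as GiB, a hypothetical 2 GiB is reserved
# for runtime overhead, and KV cache / activations are ignored entirely.

GIB = 2**30
GPU_VRAM_GIB = {"RTX 4090": 24, "RTX 5090": 32}


def weight_memory_gib(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory needed to hold the model weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / GIB


def fits(params_billion: float, bits_per_weight: float,
         headroom_gib: float = 2.0) -> dict[str, bool]:
    """Return, per GPU, whether the weights plus headroom fit in VRAM."""
    needed = weight_memory_gib(params_billion, bits_per_weight) + headroom_gib
    return {gpu: needed <= vram for gpu, vram in GPU_VRAM_GIB.items()}


if __name__ == "__main__":
    # Illustrative (hypothetical) model sizes and precisions.
    for params, bits in [(8, 16), (27, 8), (70, 4)]:
        need = weight_memory_gib(params, bits)
        print(f"{params}B @ {bits}-bit: ~{need:.1f} GiB weights -> {fits(params, bits)}")
```

The 27B-at-8-bit case is the kind of configuration where the extra capacity matters: roughly 25 GiB of weights fit on the RTX 5090 but not on the RTX 4090.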

Benchmark Data and Performance Analysis

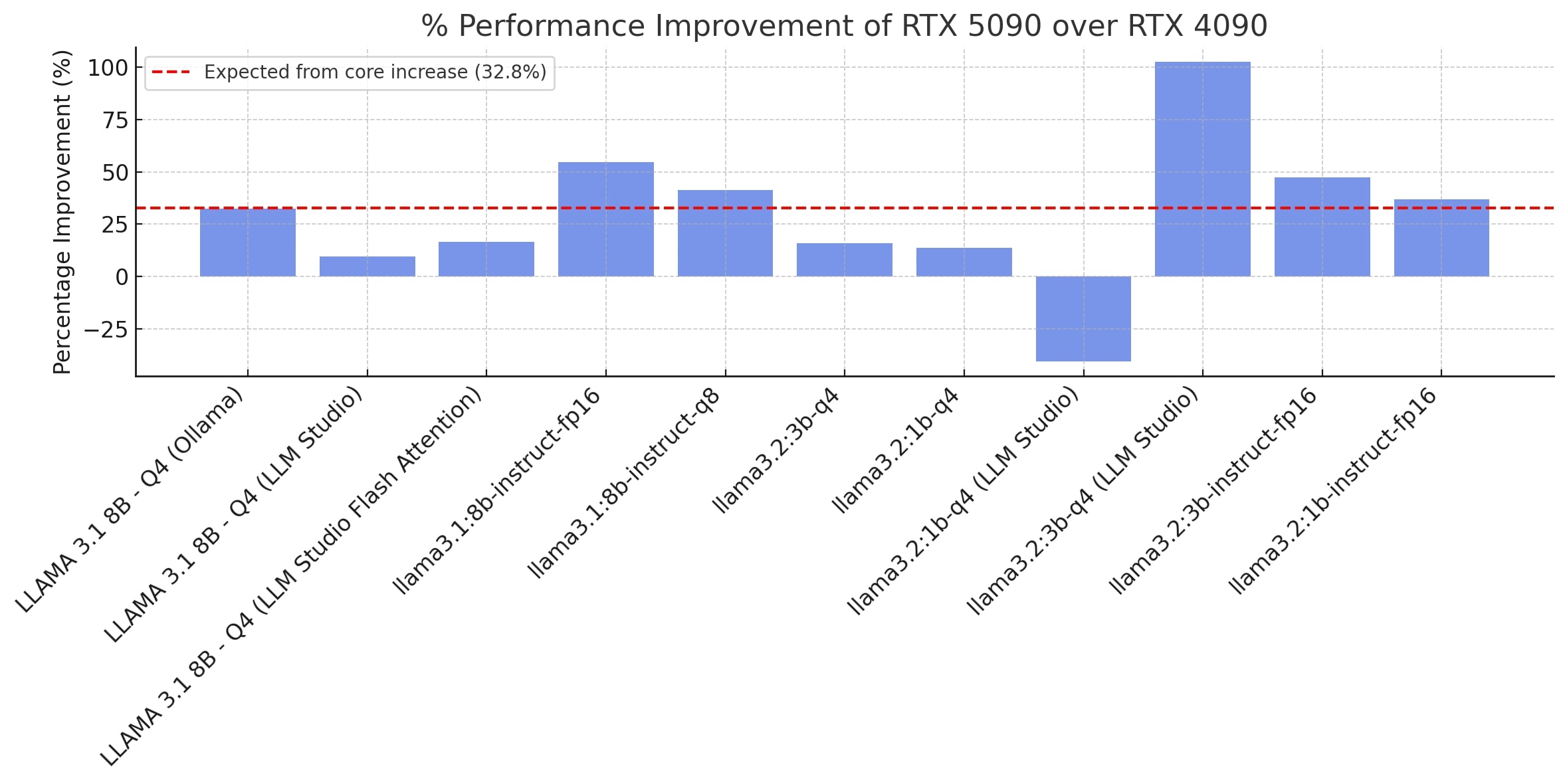

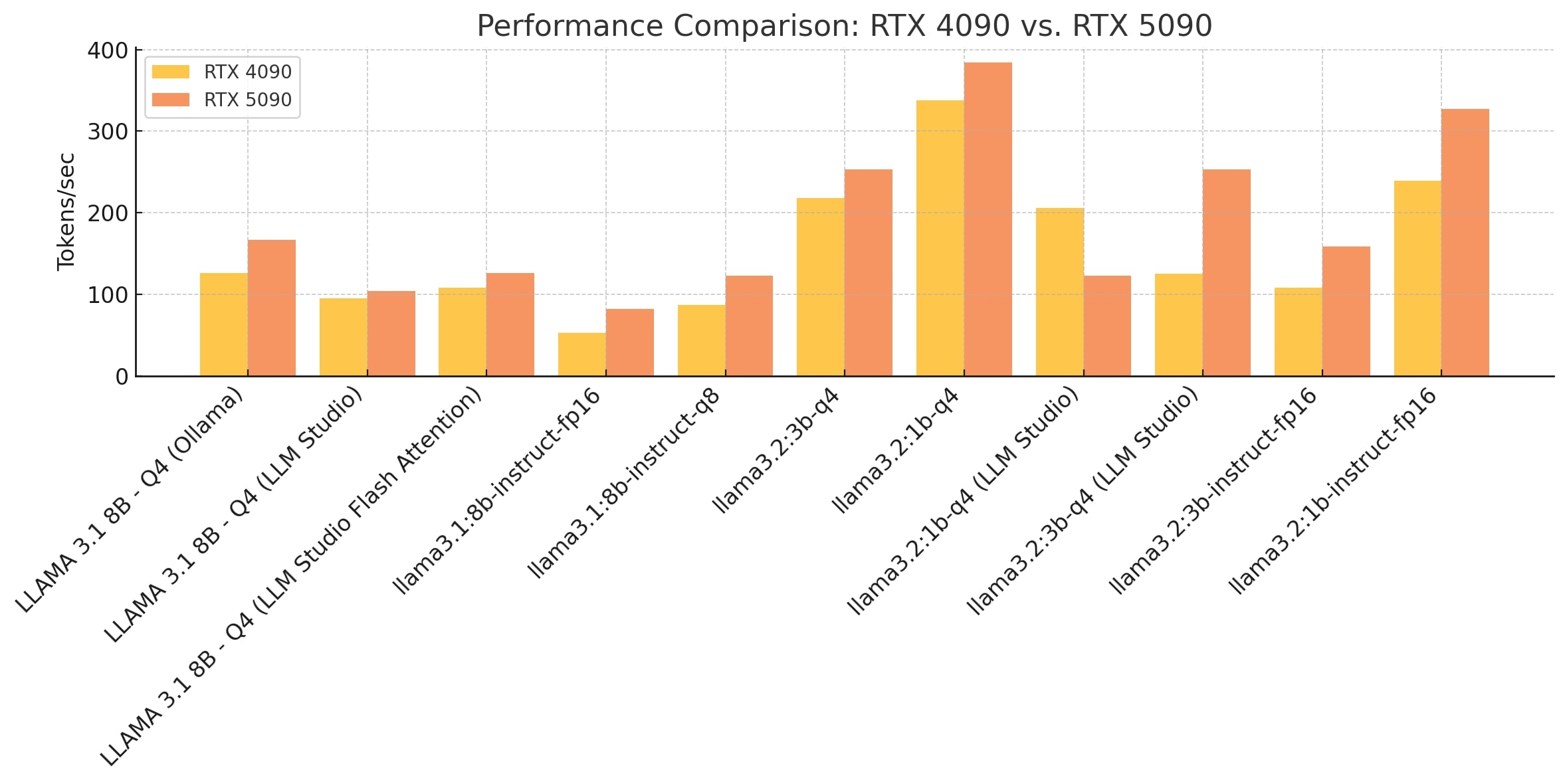

To assess the performance differences between the RTX 5090 and RTX 4090, a series of benchmark tests were conducted using various configurations of the LLaMA models. The tests measured performance in tokens per second (tok/sec) across several scenarios, providing a clear picture of how each GPU handles different workloads.

| Model/Test | RTX 5090 (tok/sec) | RTX 4090 (tok/sec) | % Improvement (5090 over 4090) |
|---|---|---|---|
| LLAMA 3.1 8B - Q4 (Test A) | 167 | 126 | 32.5% |
| LLAMA 3.1 8B - Q4 (Test B) | 104 | 95 | 9.5% |
| LLAMA 3.1 8B - Q4 (Test C) | 126 | 108 | 16.7% |
| LLAMA 3.1 8B - Instruct (FP16) | 82 | 53 | 54.7% |
| LLAMA 3.1 8B - Instruct (Q8) | 123 | 87 | 41.4% |
| LLAMA 3.2 3B - Q4 | 253 | 218 | 16.1% |
| LLAMA 3.2 1B - Q4 | 384 | 338 | 13.6% |
| LLAMA 3.2 3B - Q4 (Alternate) | 253 | 125 | 102.4% |
| LLAMA 3.2 3B - Instruct (FP16) | 159 | 108 | 47.2% |
| LLAMA 3.2 1B - Instruct (FP16) | 327 | 239 | 36.8% |

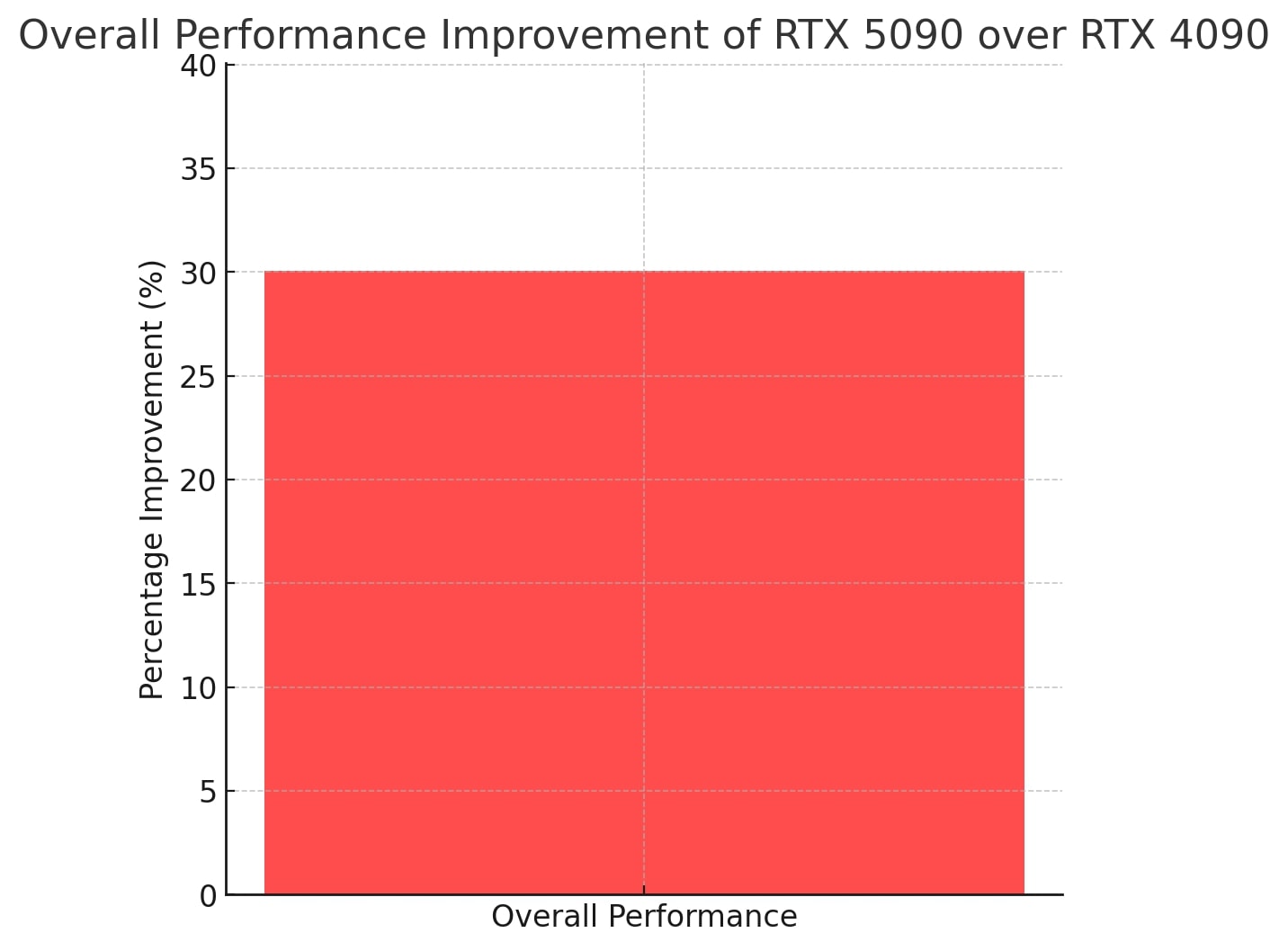

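Some test cases vary noticeably because of differences in software optimization and workload conditions. For example, the same LLAMA 3.2 3B Q4 configuration reached 218 tok/sec on the RTX 4090 in one test but only 125 tok/sec in the "Alternate" test, which produces the outlying 102.4% gain. Across all ten rows, the RTX 5090 is roughly 35% faster than the RTX 4090: the median and geometric mean of the per-row speed-ups are both close to 35%, while the simple average of the percentage column is about 37%, pulled up by that outlier.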
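The "about 35%" figure depends on how the rows are averaged. The following minimal sketch recomputes the per-row speed-up ratios from the benchmark table and summarizes them three ways; the median and geometric mean are less sensitive to the "Alternate" outlier than the arithmetic mean.

```python
from statistics import geometric_mean, mean, median

# (test, RTX 5090 tok/sec, RTX 4090 tok/sec), copied from the benchmark table.
BENCHMARKS = [
    ("LLAMA 3.1 8B - Q4 (Test A)", 167, 126),
    ("LLAMA 3.1 8B - Q4 (Test B)", 104, 95),
    ("LLAMA 3.1 8B - Q4 (Test C)", 126, 108),
    ("LLAMA 3.1 8B - Instruct (FP16)", 82, 53),
    ("LLAMA 3.1 8B - Instruct (Q8)", 123, 87),
    ("LLAMA 3.2 3B - Q4", 253, 218),
    ("LLAMA 3.2 1B - Q4", 384, 338),
    ("LLAMA 3.2 3B - Q4 (Alternate)", 253, 125),
    ("LLAMA 3.2 3B - Instruct (FP16)", 159, 108),
    ("LLAMA 3.2 1B - Instruct (FP16)", 327, 239),
]

# Speed-up ratio per row: values above 1.0 mean the RTX 5090 is faster.
ratios = [r5090 / r4090 for _, r5090, r4090 in BENCHMARKS]

# Express each summary statistic as a percentage gain over the RTX 4090.
summary = {
    "arithmetic mean": mean(ratios) - 1,
    "median": median(ratios) - 1,
    "geometric mean": geometric_mean(ratios) - 1,
}

for label, gain in summary.items():
    print(f"{label:>15}: {gain:.1%}")
```

Run as-is, it reports about 37.1% (arithmetic mean), 34.7% (median), and 34.9% (geometric mean), so "approximately 35%" corresponds to the median and geometric mean. Geometric means are the usual choice for averaging ratios because one large outlier cannot dominate them.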

Visual Data: Charts and Graphs

To help visualize these performance comparisons, the following charts have been prepared. Each chart highlights a different aspect of the RTX 5090 vs. RTX 4090 comparison.

Performance Comparison Graph

First overall performance chart emphasizing relative gains in different model configurations.

Overall Performance Chart #1

Overall performance chart showing average speed-ups across multiple tests.

Overall Performance Chart #2

A side-by-side performance comparison chart highlighting tokens-per-second results.

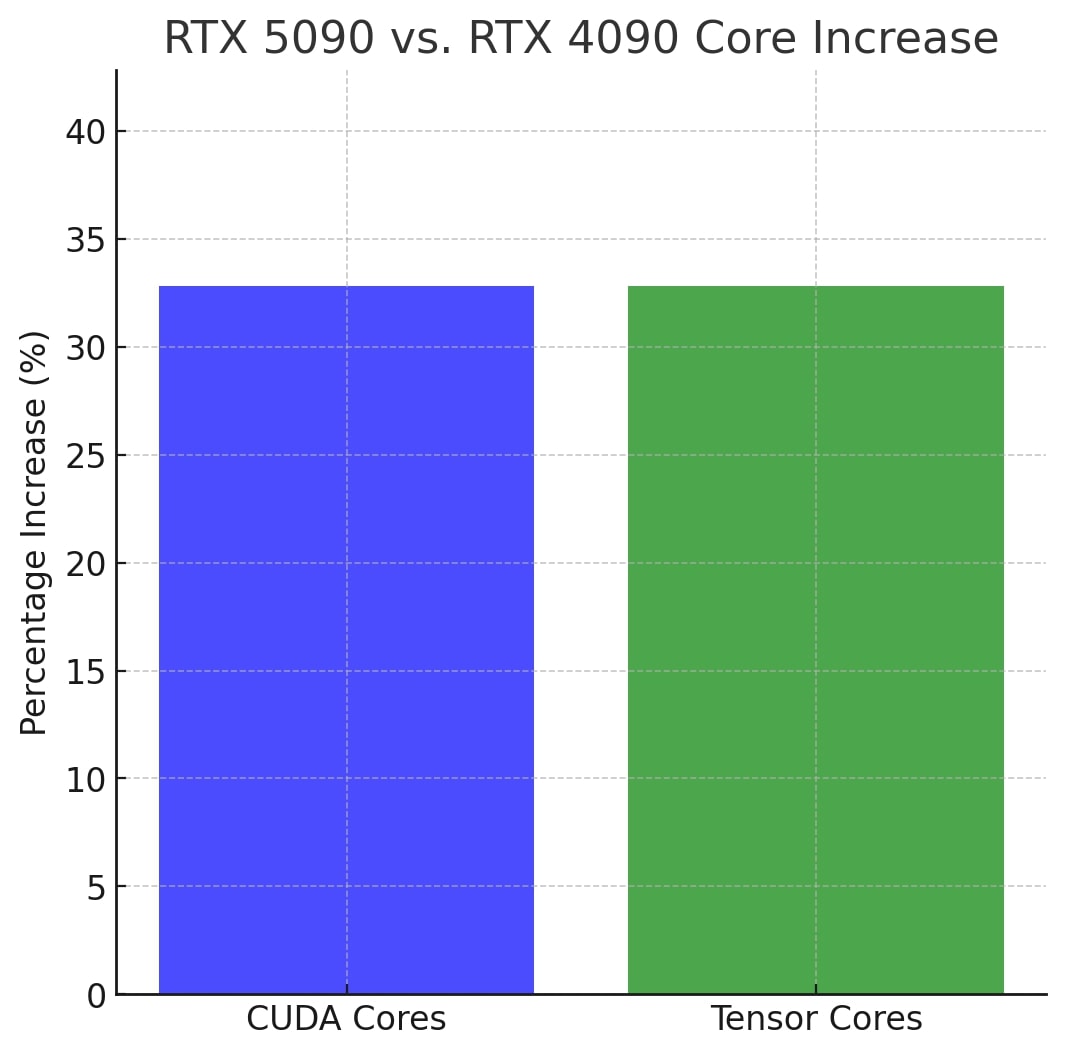

Core Increase Graph

This graph illustrates the increase in CUDA and Tensor cores from the RTX 4090 to the RTX 5090.

Price Analysis and Cost Efficiency

Price is a critical factor in hardware selection. The RTX 4090 is priced at $1,800, while the RTX 5090 is available for $2,600, a price increase of about 44.4%. Because the RTX 5090's average performance improvement (about 35%) is smaller than its price premium, each unit of throughput costs roughly 7% more on the RTX 5090 (1.444 / 1.35 ≈ 1.07), so cost per unit of performance deserves careful consideration.
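The cost-per-performance arithmetic can be written out as a small sketch. It uses the prices quoted in this article and treats the ~35% average gain as a single speed-up factor of 1.35, which is a simplification: the real factor varies by workload.

```python
# Relative cost per unit of throughput, RTX 5090 vs RTX 4090.
# Prices are the figures quoted in this article; 1.35 stands in for the
# ~35% average speed-up (an assumption, since the gain varies by workload).

PRICE_USD = {"RTX 4090": 1800, "RTX 5090": 2600}


def relative_cost_per_performance(price_new: float, price_old: float,
                                  speedup: float) -> float:
    """Values above 1.0 mean the newer card costs more per unit of throughput."""
    return (price_new / price_old) / speedup


price_ratio = PRICE_USD["RTX 5090"] / PRICE_USD["RTX 4090"]
cost_ratio = relative_cost_per_performance(
    PRICE_USD["RTX 5090"], PRICE_USD["RTX 4090"], 1.35)
# Speed-up at which both cards cost the same per unit of throughput.
break_even_gain = price_ratio - 1

print(f"Cost per unit of performance (5090 / 4090): {cost_ratio:.2f}")
print(f"Break-even speed-up: {break_even_gain:.1%}")
```

The break-even speed-up equals the price premium: on any workload where the RTX 5090 is more than about 44.4% faster, it delivers more throughput per dollar than the RTX 4090.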

For users whose workloads are adequately handled by the RTX 4090, the lower price point may offer a more attractive balance between cost and performance. Conversely, those with needs for higher memory capacity and enhanced processing power might find the RTX 5090 better suited for their applications.

In-Depth Analysis of Core Improvements

One of the notable differences between the two GPUs is the increase in core counts. The RTX 5090 is equipped with 21,760 CUDA cores and 680 Tensor cores, while the RTX 4090 has 16,384 CUDA cores and 512 Tensor cores. This amounts to an increase of roughly 32.8% in both CUDA and Tensor cores.

In theory, if compute were the only limiting factor, this would translate into a roughly proportional performance boost. In practice, however, factors such as clock speeds, memory bandwidth, and software optimizations also play significant roles in determining overall performance. The benchmark data reflects this: five of the ten rows (all FP16 and Q8 runs, plus the "Alternate" 3B Q4 run) exceed the 32.8% core increase, while the remaining Q4 runs improve by only 9.5% to 32.5%. Understanding these nuances can help you evaluate whether the additional hardware resources meet your specific performance requirements.
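A minimal sketch that checks each benchmark row against the core-count ratio. It assumes the core ratio is a fair "compute-only" baseline, which is a simplification: clock speeds, memory bandwidth, and software all move the real result away from it.

```python
# Compare each benchmark's measured speed-up with the core-count ratio.
CUDA_CORES = {"RTX 4090": 16384, "RTX 5090": 21760}
TENSOR_CORES = {"RTX 4090": 512, "RTX 5090": 680}

cuda_ratio = CUDA_CORES["RTX 5090"] / CUDA_CORES["RTX 4090"]
tensor_ratio = TENSOR_CORES["RTX 5090"] / TENSOR_CORES["RTX 4090"]
# Both ratios are exactly 85/64 = 1.328125, i.e. a 32.8% increase.
core_ratio = cuda_ratio

# (test, RTX 5090 tok/sec, RTX 4090 tok/sec), copied from the benchmark table.
BENCHMARKS = [
    ("LLAMA 3.1 8B - Q4 (Test A)", 167, 126),
    ("LLAMA 3.1 8B - Q4 (Test B)", 104, 95),
    ("LLAMA 3.1 8B - Q4 (Test C)", 126, 108),
    ("LLAMA 3.1 8B - Instruct (FP16)", 82, 53),
    ("LLAMA 3.1 8B - Instruct (Q8)", 123, 87),
    ("LLAMA 3.2 3B - Q4", 253, 218),
    ("LLAMA 3.2 1B - Q4", 384, 338),
    ("LLAMA 3.2 3B - Q4 (Alternate)", 253, 125),
    ("LLAMA 3.2 3B - Instruct (FP16)", 159, 108),
    ("LLAMA 3.2 1B - Instruct (FP16)", 327, 239),
]

# Split rows by whether they beat the proportional-scaling baseline.
above = [name for name, a, b in BENCHMARKS if a / b > core_ratio]
below = [name for name, a, b in BENCHMARKS if a / b <= core_ratio]

print(f"Core increase: {core_ratio - 1:.1%}")
print("Above core scaling:", *above, sep="\n  ")
print("At or below core scaling:", *below, sep="\n  ")
```

Listing the two groups side by side makes it easy to see which precision and quantization settings track the core increase and which fall short of it.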

It is essential to view these figures as one part of a broader performance picture. The extra cores in the RTX 5090 facilitate enhanced parallel processing, which can lead to faster computations in data-intensive applications and large-scale model training. This additional capacity is especially beneficial for tasks that involve real-time data processing or complex simulations.

Real-World Performance and Use Cases

While benchmark tests provide valuable insights, real-world performance is ultimately what matters. The RTX 5090 and RTX 4090 are deployed in a variety of applications, from deep learning research and AI model training to complex simulations and data analytics.

For example, in environments where processing speed is critical, such as real-time inference or high-throughput data analysis, the extra performance offered by the RTX 5090 can reduce latency and improve overall system responsiveness. On the other hand, in scenarios where the existing capacity of the RTX 4090 is sufficient, the cost savings may outweigh the marginal performance gains.
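Throughput translates directly into waiting time for a user. A minimal sketch, assuming the decode rate stays constant over the whole response and ignoring prompt processing (time to first token); the 500-token response length is a hypothetical example, and the rates come from the LLAMA 3.1 8B - Instruct (FP16) row of the benchmark table.

```python
def generation_time_s(num_tokens: int, tok_per_sec: float) -> float:
    """Seconds to generate num_tokens at a steady decode rate."""
    return num_tokens / tok_per_sec


RESPONSE_TOKENS = 500  # hypothetical response length
# LLAMA 3.1 8B - Instruct (FP16) throughput from the benchmark table, tok/sec.
RATES = {"RTX 5090": 82, "RTX 4090": 53}

for gpu, rate in RATES.items():
    print(f"{gpu}: {generation_time_s(RESPONSE_TOKENS, rate):.1f} s")
```

That works out to about 6.1 s versus 9.4 s per response; whether the difference matters depends on how often users or downstream jobs are actually waiting on generation.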

Many organizations perform their own evaluations based on workload-specific benchmarks. It is advisable to consider the nature of your tasks, the size of your datasets, and your performance requirements when deciding which GPU best fits your needs.
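A workload-specific benchmark does not need to be elaborate. The sketch below times a generation function and reports median tokens per second after warm-up runs. The `generate(prompt, max_new_tokens)` interface is hypothetical: wrap whatever inference stack you use so that it blocks until generation has finished and returns the number of tokens produced. `fake_generate` is a stand-in used only to demonstrate the harness.

```python
import statistics
import time
from typing import Callable

# Hypothetical interface: generate(prompt, max_new_tokens) runs one request and
# returns the number of tokens actually produced. It must block until the work
# is done (e.g. synchronize the GPU), or the timings will be too optimistic.
GenerateFn = Callable[[str, int], int]


def measure_tok_per_sec(generate: GenerateFn, prompt: str,
                        max_new_tokens: int = 256,
                        warmup: int = 1, runs: int = 5) -> float:
    """Median tokens/sec over several timed runs, after untimed warm-up runs."""
    for _ in range(warmup):
        generate(prompt, max_new_tokens)  # warm caches, load kernels, etc.

    rates = []
    for _ in range(runs):
        start = time.perf_counter()
        produced = generate(prompt, max_new_tokens)
        elapsed = time.perf_counter() - start
        rates.append(produced / elapsed)
    return statistics.median(rates)


def fake_generate(prompt: str, max_new_tokens: int) -> int:
    """Stand-in generator: sleeps ~1 ms per token, no real model involved."""
    time.sleep(max_new_tokens * 0.001)
    return max_new_tokens


if __name__ == "__main__":
    print(f"{measure_tok_per_sec(fake_generate, 'Hello', 50):.0f} tok/sec")
```

Using the median rather than the mean keeps a single slow run (for example, one interrupted by background activity) from skewing the result.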

Technical Considerations and Best Practices

When selecting a GPU for AI workloads, it is important to consider the entire ecosystem. Performance is influenced not only by core counts and VRAM but also by system configuration, cooling solutions, power delivery, and software optimizations. Ensuring that your system is balanced and that components work harmoniously together can maximize the benefits of a high-performance GPU.

Some best practices include:

- Optimizing system configurations and driver settings for your specific workloads.

- Regularly benchmarking and monitoring performance to identify potential bottlenecks.

- Considering future scalability by choosing hardware that can support growing computational demands.

- Evaluating the entire cost of ownership, including energy consumption and maintenance requirements.
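Energy is often the easiest part of total cost of ownership to estimate. The rough sketch below uses NVIDIA's rated total graphics power (575 W for the RTX 5090, 450 W for the RTX 4090) as a worst-case draw. The electricity price and daily hours are hypothetical placeholders, and the estimate covers only the GPU, not the rest of the system or cooling. Real inference workloads frequently draw less than the rated figure.

```python
# Rated total graphics power, used here as a worst-case (full-load) draw.
RATED_POWER_W = {"RTX 5090": 575, "RTX 4090": 450}


def annual_energy_cost_usd(power_w: float, hours_per_day: float,
                           usd_per_kwh: float, days: int = 365) -> float:
    """Energy cost for one GPU drawing power_w watts on the given schedule."""
    kwh = power_w / 1000 * hours_per_day * days
    return kwh * usd_per_kwh


HOURS_PER_DAY = 8    # hypothetical duty cycle
USD_PER_KWH = 0.15   # hypothetical electricity price

for gpu, watts in RATED_POWER_W.items():
    cost = annual_energy_cost_usd(watts, HOURS_PER_DAY, USD_PER_KWH)
    print(f"{gpu}: ~${cost:,.0f} per year (GPU only)")
```

Under these placeholder assumptions the difference is roughly $55 per GPU per year, small next to the $800 purchase-price gap but worth including for multi-GPU or round-the-clock deployments.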

By taking a holistic approach, you can ensure that your hardware investment delivers both immediate benefits and long-term value.

Price-to-Performance Ratio: A Balanced Perspective

The price-to-performance ratio is a key metric for anyone evaluating high-end hardware. With the RTX 4090 at $1,800 and the RTX 5090 at $2,600, the price increase is about 44.4%, while the average performance gain is about 35%. Because the price rises faster than average throughput, the RTX 5090 delivers somewhat less performance per dollar on these benchmarks, which is why the value proposition matters as much as the raw performance numbers.
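Averages hide how value varies by workload. A minimal sketch that computes tokens per second per $1,000 of GPU price for each benchmark row, using the prices quoted in this article; a row favors the RTX 5090 on value only if its speed-up exceeds the 44.4% price premium.

```python
PRICE_USD = {"RTX 5090": 2600, "RTX 4090": 1800}

# (test, RTX 5090 tok/sec, RTX 4090 tok/sec), copied from the benchmark table.
BENCHMARKS = [
    ("LLAMA 3.1 8B - Q4 (Test A)", 167, 126),
    ("LLAMA 3.1 8B - Q4 (Test B)", 104, 95),
    ("LLAMA 3.1 8B - Q4 (Test C)", 126, 108),
    ("LLAMA 3.1 8B - Instruct (FP16)", 82, 53),
    ("LLAMA 3.1 8B - Instruct (Q8)", 123, 87),
    ("LLAMA 3.2 3B - Q4", 253, 218),
    ("LLAMA 3.2 1B - Q4", 384, 338),
    ("LLAMA 3.2 3B - Q4 (Alternate)", 253, 125),
    ("LLAMA 3.2 3B - Instruct (FP16)", 159, 108),
    ("LLAMA 3.2 1B - Instruct (FP16)", 327, 239),
]


def tok_per_sec_per_1k_usd(tok_per_sec: float, price_usd: float) -> float:
    """Throughput bought per $1,000 of GPU price."""
    return tok_per_sec / price_usd * 1000


better_value_5090 = []
for name, r5090, r4090 in BENCHMARKS:
    v5090 = tok_per_sec_per_1k_usd(r5090, PRICE_USD["RTX 5090"])
    v4090 = tok_per_sec_per_1k_usd(r4090, PRICE_USD["RTX 4090"])
    if v5090 > v4090:
        better_value_5090.append(name)
    print(f"{name:32} 5090: {v5090:6.1f}  4090: {v4090:6.1f}")

print(f"RTX 5090 wins on value in {len(better_value_5090)} of {len(BENCHMARKS)} rows")
```

With the table's figures, the RTX 5090 comes out ahead on throughput per dollar in three of the ten rows: the FP16 runs of LLAMA 3.1 8B and LLAMA 3.2 3B, and the anomalous "Alternate" 3B Q4 run.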

An informed decision should weigh both the technical merits and the financial implications. For many users, the RTX 4090 may offer sufficient performance at a lower cost, while the RTX 5090 might be more attractive for projects that demand higher computational capacity and memory resources.

Conclusion: The Future of Professional GPUs

The NVIDIA RTX PRO Series GPUs, particularly the RTX PRO 6000, represent a significant leap forward in professional graphics processing. With their advanced architecture, expansive VRAM capacity, and superior performance benchmarks, these GPUs are poised to redefine standards across various industries. As professionals continue to demand more from their hardware, the RTX PRO Series stands ready to deliver exceptional performance and efficiency, making it a compelling choice for those looking to push boundaries in their respective fields.

At Bizon, we leverage these cutting-edge GPUs in our custom workstation and server solutions, ensuring that creative professionals, researchers, and innovators alike can harness the full potential of this technology. By integrating the RTX PRO Series into our robust hardware offerings, Bizon empowers businesses to handle complex workloads, accelerate project timelines, and remain at the forefront of digital transformation. As the tech landscape continues to evolve, forward-thinking organizations should embrace this new generation of GPUs—together with Bizon’s expertise—to drive innovation and secure a competitive edge in an increasingly digital world.

Conclusion and Final Thoughts

In summary, the NVIDIA RTX 5090 and RTX 4090 represent two powerful options for modern AI workloads. The RTX 5090 provides a notable performance boost with its enhanced core counts and increased VRAM, making it well-suited for the most demanding tasks. However, this improvement comes at a higher price, which may not be justified for every application.

This analysis has provided detailed insights into the specifications, benchmark performance, and cost efficiency of both GPUs. By understanding the nuances of core improvements, memory capacity, and real-world performance, you can better determine which GPU aligns with your specific needs.

Whether you choose the RTX 5090 for its future-proof capabilities or the RTX 4090 for its excellent balance of performance and cost, the key is to base your decision on a thorough evaluation of your workload requirements and budget constraints.

We hope this detailed, objective comparison has provided you with the information you need to make an informed decision about your next GPU upgrade. As AI and deep learning continue to evolve, staying up-to-date with the latest hardware trends is essential for maintaining a competitive edge.

For further reading and technical details, consider exploring additional resources on GPU architecture, performance optimization, and system integration best practices. This knowledge will not only help in your immediate decision-making but will also prepare you for future advancements in the field.

If you’re ready to harness the power of the RTX 5090 or RTX 4090, BIZON can help you tailor a workstation to match your computing needs. From the more compact X5500 and ZX5500 models to the powerhouse Z8000, each system is meticulously engineered to maximize GPU performance for AI and HPC workloads. With flexible configurations in CPU, memory, and cooling solutions, BIZON workstations can be scaled to accommodate advanced research, large-scale model training, real-time analytics, or any AI-driven innovation on the horizon.

Thank you for taking the time to review this in-depth analysis. Making the right hardware choice is critical, and we encourage you to assess your specific needs carefully. The data and insights provided here aim to empower you to choose the GPU—and the workstation—that will best support your projects and long-term goals.

Make an informed decision – evaluate your requirements, compare the facts, and choose the GPU that meets your needs.