Table of Contents

- Introduction

- What are NVIDIA Tensor Core GPUs?

- NVIDIA B200: Enterprise AI Powerhouse

- NVIDIA Blackwell HGX B100: Premier Accelerated Platform

- NVIDIA H200: Advanced HPC and AI Workloads

- NVIDIA H100: A Leap in Performance

- NVIDIA A100: The AI Powerhouse

- B200 vs B100 vs H200 vs H100 vs A100: A Detailed Spec Comparison

- Future Prospects and Developments

- Bizon Workstations Maximizing the Power of NVIDIA Tensor Core GPUs

- Conclusion

NVIDIA Tensor Core GPUs Comparison - NVIDIA B200 vs B100 vs H200 vs H100 vs A100 [ Updated ]

Introduction

NVIDIA Tensor Core GPUs are built for the dense matrix arithmetic behind AI and machine learning workloads, and they handle complex, data-intensive tasks efficiently. In this post, we compare five major models (B200, B100, H200, H100, and A100), focusing on their key features, specifications, and practical applications. These GPUs are integrated into the high-performance workstations offered by Bizon, ensuring our customers have access to top-tier computational power.

What are NVIDIA Tensor Core GPUs?

NVIDIA Tensor Core GPUs feature specialized processing units that accelerate the matrix multiply-accumulate operations at the heart of deep learning and AI workloads, typically in reduced or mixed precision. First introduced with the Volta architecture, these cores have become essential components in high-performance computing applications, offering significant boosts in both speed and efficiency.
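As a rough illustration of how the generations line up, the sketch below maps a CUDA compute capability to the data-center architecture it belongs to. The table and the helper name `architecture_for` are illustrative assumptions, not part of any NVIDIA API, and only the data-center parts relevant to this post are listed.

```python
from typing import Tuple

# Compute capabilities of the data-center parts discussed in this post.
# Consumer and workstation GPUs use other values and are not listed here.
DATA_CENTER_ARCH = {
    (7, 0): "Volta",       # V100, the first Tensor Core GPU
    (8, 0): "Ampere",      # A100
    (9, 0): "Hopper",      # H100, H200
    (10, 0): "Blackwell",  # B100, B200
}


def architecture_for(capability: Tuple[int, int]) -> str:
    """Return the architecture name for a (major, minor) compute capability."""
    return DATA_CENTER_ARCH.get(capability, "unknown")


for cap in sorted(DATA_CENTER_ARCH):
    print(cap, architecture_for(cap))
```

On a machine with PyTorch and a CUDA device, `torch.cuda.get_device_capability()` returns a tuple of this shape, so its result could be passed to the helper directly.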

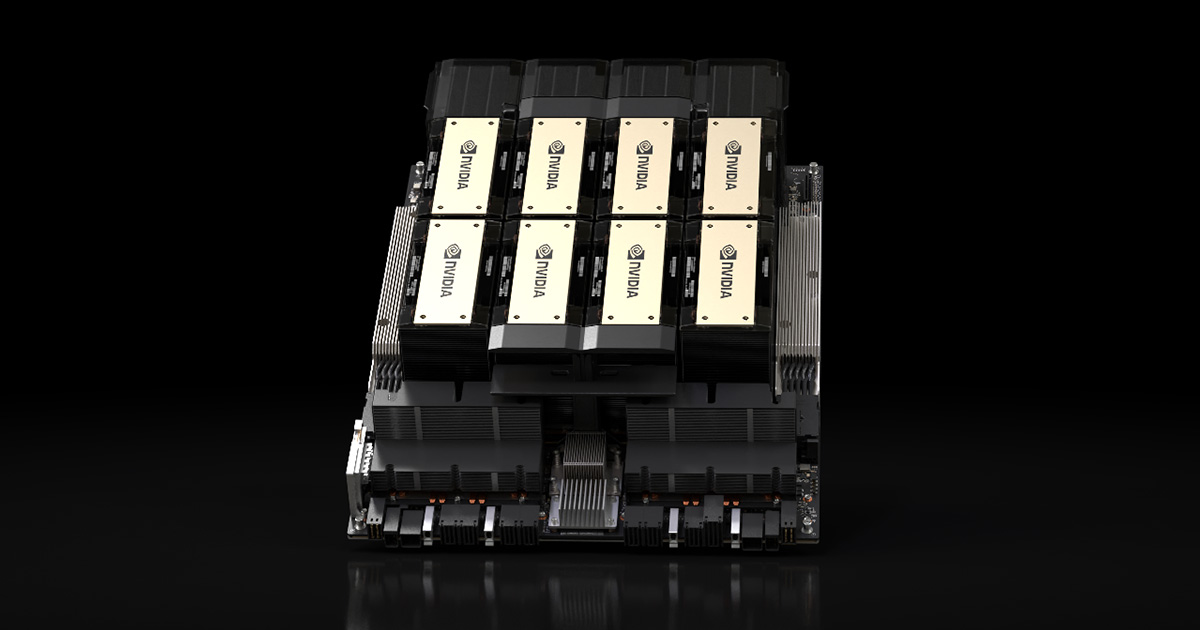

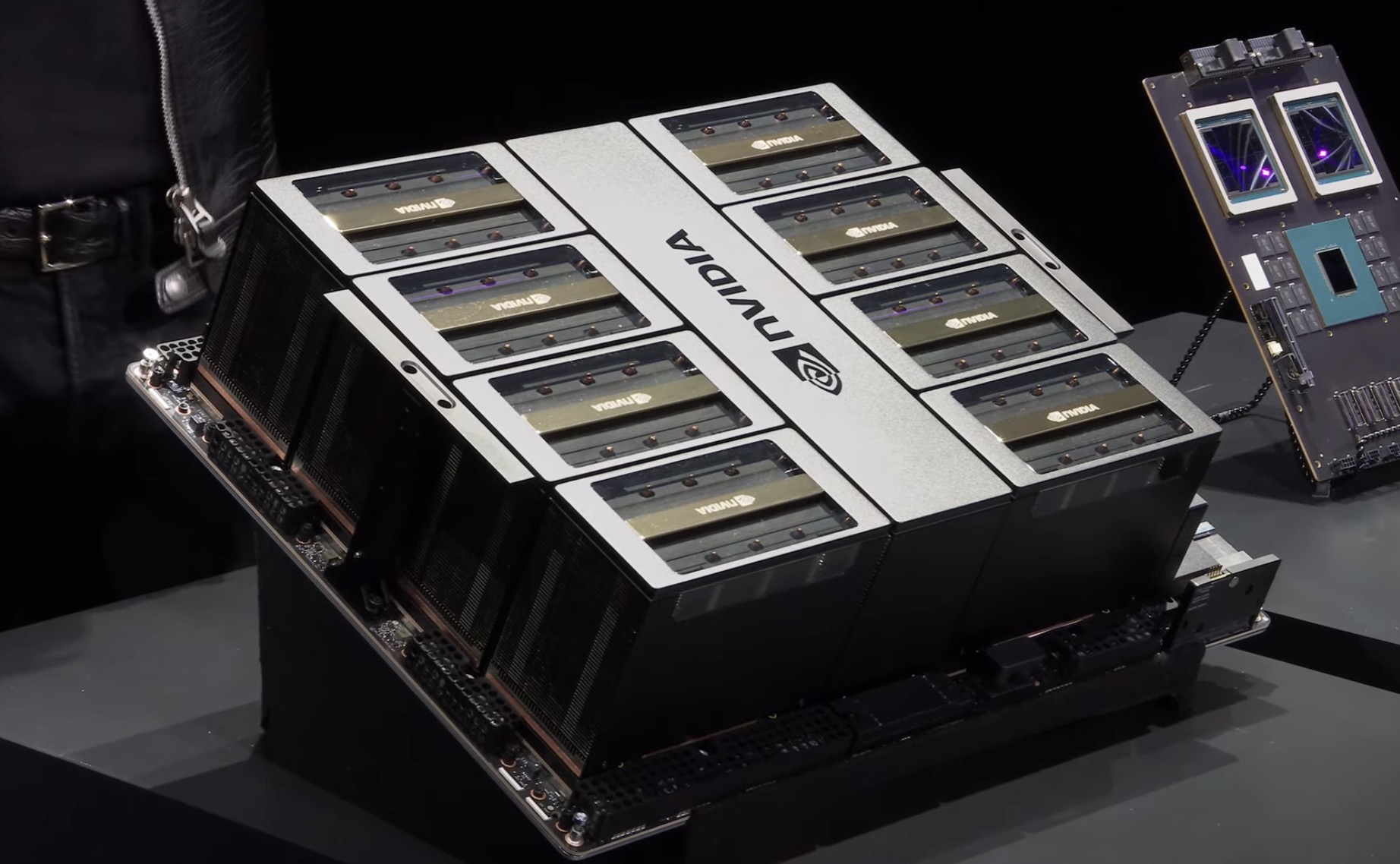

NVIDIA B200: Enterprise AI Powerhouse

The NVIDIA Blackwell HGX B200 is built on NVIDIA’s latest Blackwell architecture. Each B200 GPU delivers up to 18 petaFLOPS of FP4 Tensor Core performance, which adds up to 144 petaFLOPS across an eight-GPU HGX B200 board, and carries 192GB of HBM3e memory with 8TB/s of bandwidth. Configurable to a TDP of up to 1000W per GPU, it targets enterprise-level AI and HPC applications. NVIDIA claims up to 12x better total cost of ownership (TCO) than previous models like the H100, making it a leading choice for x86 scale-up platforms.

- Performance: 18 petaFLOPS FP4 per GPU, 144 petaFLOPS per eight-GPU HGX board

- Memory: 192GB HBM3e, 8TB/s bandwidth

- Power Configuration: Configurable up to 1000W

NVIDIA Blackwell HGX B100: Premier Accelerated Platform

The NVIDIA Blackwell HGX B100 delivers up to 14 petaFLOPS of FP4 Tensor Core performance per GPU, or 112 petaFLOPS across an eight-GPU HGX B100 board, with 192GB of HBM3e memory and 8TB/s of bandwidth per GPU. With a power configuration of up to 700W, the B100 is designed for compatibility with HGX H100 systems, making it a natural upgrade path that balances performance and efficiency.

- Performance: 14 petaFLOPS FP4 per GPU, 112 petaFLOPS per eight-GPU HGX board

- Memory: 192GB HBM3e, 8TB/s bandwidth

- Power Configuration: Configurable up to 700W
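The two performance figures quoted for each Blackwell part are consistent with one being a per-GPU FP4 number and the other the total for a full board. A minimal sketch of that arithmetic, assuming the eight-GPU HGX configuration (the helper name `board_total` is hypothetical):

```python
GPUS_PER_BOARD = 8  # assumption: the eight-GPU HGX configuration


def board_total(per_gpu: float, gpus: int = GPUS_PER_BOARD) -> float:
    """Scale a per-GPU peak figure to a whole board of identical GPUs."""
    return per_gpu * gpus


b200_fp4 = board_total(18)  # per-GPU FP4 petaFLOPS for the B200
b100_fp4 = board_total(14)  # per-GPU FP4 petaFLOPS for the B100
print(f"B200 board: {b200_fp4} petaFLOPS FP4")  # prints 144
print(f"B100 board: {b100_fp4} petaFLOPS FP4")  # prints 112
```

These are peak figures; real workloads rarely scale perfectly across eight GPUs, so the board totals are best read as upper bounds.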

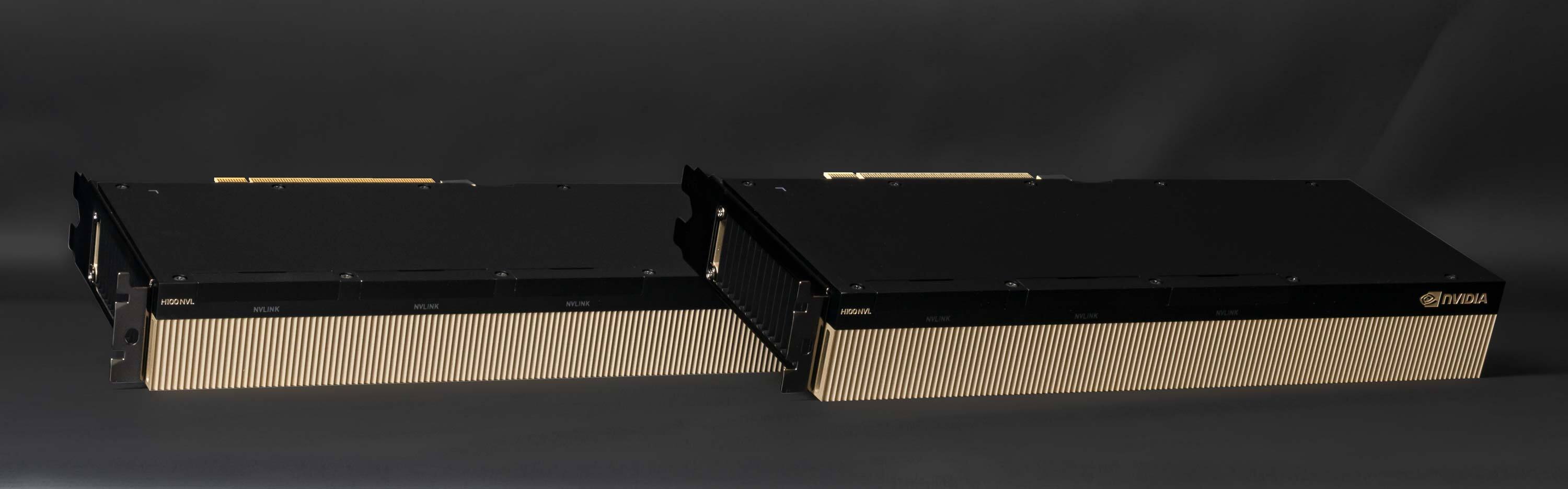

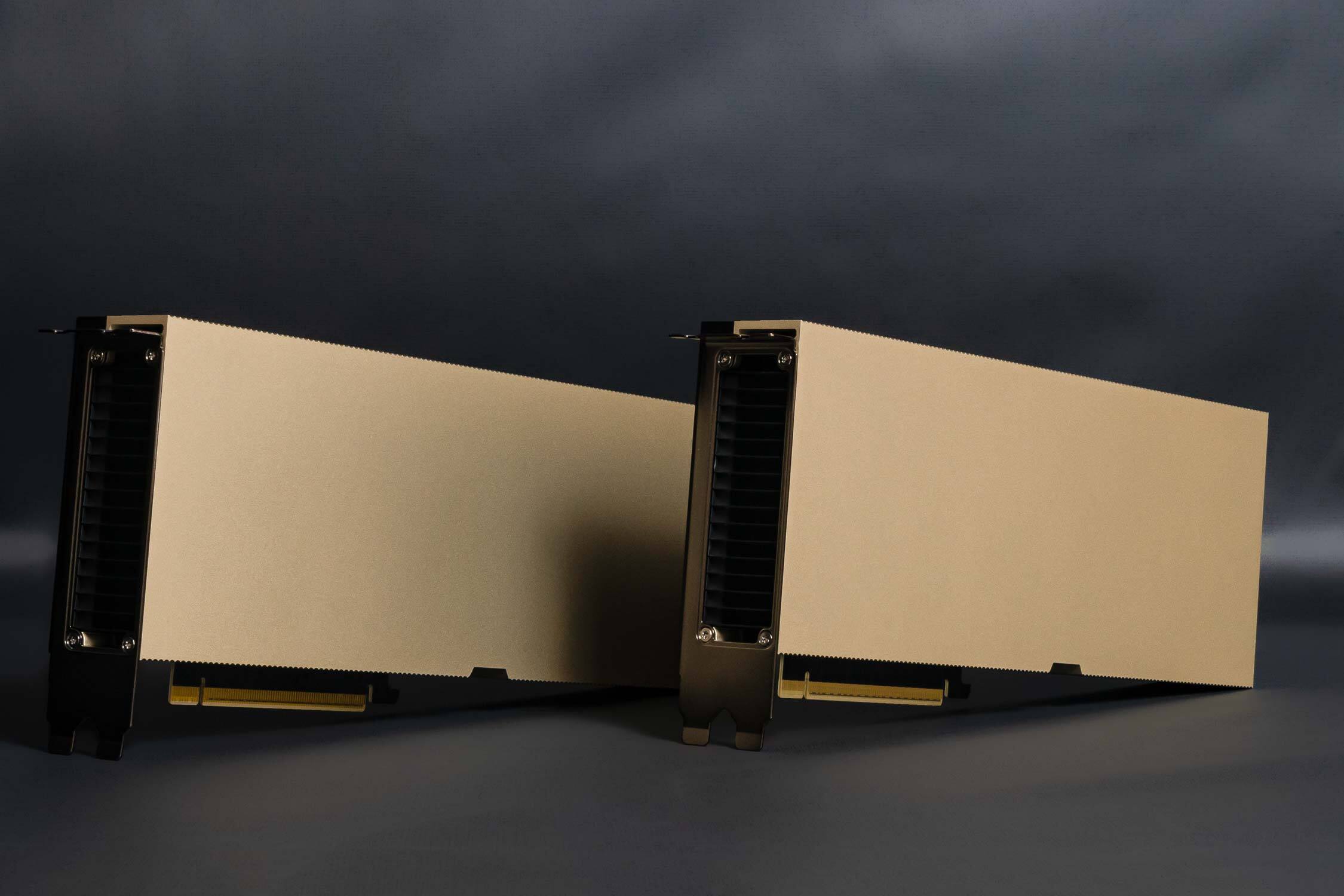

NVIDIA H200: Advanced HPC and AI Workloads

The NVIDIA H200 features 141GB of HBM3e memory and 4.8TB/s of bandwidth, roughly 1.4x the memory bandwidth of the H100. It delivers about 4 petaFLOPS of FP8 Tensor Core performance and stays within a 700W power envelope, which suits data-intensive applications and large-scale HPC deployments.

- Performance: about 4 petaFLOPS (FP8 Tensor Core)

- Memory: 141GB HBM3e, 4.8TB/s bandwidth

- Power Consumption: 700W
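To see where the 1.4x figure comes from and why bandwidth matters, the sketch below computes the H200-to-H100 ratios and a lower bound on the time needed to read a set of model weights once from GPU memory. The H100 bandwidth of 3.35TB/s is an assumption taken from NVIDIA's H100 SXM datasheet, and the helper names are hypothetical.

```python
H100 = {"memory_gb": 80, "bandwidth_tb_s": 3.35}  # bandwidth: assumed SXM datasheet value
H200 = {"memory_gb": 141, "bandwidth_tb_s": 4.8}


def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


def weight_read_ms(weights_gb: float, bandwidth_tb_s: float) -> float:
    """Lower bound, in milliseconds, on reading `weights_gb` once from HBM."""
    # 1 TB/s equals 1000 GB/s, so GB divided by TB/s yields milliseconds.
    return weights_gb / bandwidth_tb_s


print(f"bandwidth ratio: {ratio(H200['bandwidth_tb_s'], H100['bandwidth_tb_s']):.2f}x")
print(f"memory ratio:    {ratio(H200['memory_gb'], H100['memory_gb']):.2f}x")
for name, gpu in (("H100", H100), ("H200", H200)):
    print(f"{name}: {weight_read_ms(70, gpu['bandwidth_tb_s']):.1f} ms to read 70 GB")
```

For memory-bound inference, where each generated token requires reading the weights once, this read time is a floor on per-token latency, which is why the bandwidth increase can matter as much as the raw FLOPS.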

NVIDIA H100: A Leap in Performance

The NVIDIA H100, released in 2022, offers 80GB of HBM3 memory and provides roughly 2,000 TFLOPS in FP16 Tensor Core operations with sparsity. With a TDP of up to 700W in its SXM form factor, the H100 is designed for deep learning research and real-time inference, offering high efficiency for advanced workloads.

- Performance: about 2,000 TFLOPS (FP16), about 1,000 TFLOPS (TF32), both with sparsity

- Memory: 80GB HBM3

- Power Consumption: up to 700W (SXM)

NVIDIA A100: The AI Powerhouse

The NVIDIA A100 remains a powerful option for AI workloads with 312 TFLOPS of FP16 Tensor Core performance (624 TFLOPS with sparsity) and 432 third-generation Tensor Cores. Available with 40GB of HBM2 or 80GB of HBM2e memory, the A100 is still widely used for supercomputing, large-scale data analytics, and multi-tenant environments.

- Performance: 312 TFLOPS (FP16 Tensor Core, dense)

- Memory: 40GB HBM2 or 80GB HBM2e

- Power Consumption: 400W
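Choosing between the 40GB and 80GB variants often comes down to whether a model's weights fit in memory. The sketch below estimates the weight footprint from the parameter count and numeric precision. It is a simplification that ignores activations, optimizer state, and any inference cache, and the helper names are hypothetical.

```python
# Bytes used to store one parameter at each precision the A100 supports.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0}


def weights_gb(params_billion: float, precision: str) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return params_billion * BYTES_PER_PARAM[precision]


def fits(params_billion: float, precision: str, gpu_memory_gb: float) -> bool:
    """True if the weights alone fit in the given GPU memory."""
    return weights_gb(params_billion, precision) <= gpu_memory_gb


for params in (13, 30):
    for memory in (40, 80):
        verdict = "fits" if fits(params, "fp16", memory) else "does not fit"
        print(f"{params}B parameters in FP16 {verdict} in {memory} GB")
```

In practice some headroom is needed beyond the weights, so a model that only just fits by this estimate will usually need the larger card or a lower precision.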

B200 vs B100 vs H200 vs H100 vs A100: A Detailed Spec Comparison

| Specification | NVIDIA B200 | NVIDIA B100 | NVIDIA H200 | NVIDIA H100 | NVIDIA A100 |
|---|---|---|---|---|---|
| Architecture | Blackwell | Blackwell | Hopper | Hopper | Ampere |
| GPU Name | NVIDIA B200 | NVIDIA B100 | NVIDIA H200 | NVIDIA H100 | NVIDIA A100 |
| FP64 | 40 teraFLOPS | 30 teraFLOPS | 34 teraFLOPS | 34 teraFLOPS | 9.7 teraFLOPS |
| FP64 Tensor Core | 40 teraFLOPS | 30 teraFLOPS | 67 teraFLOPS | 67 teraFLOPS | 19.5 teraFLOPS |
| FP32 | 80 teraFLOPS | 60 teraFLOPS | 67 teraFLOPS | 67 teraFLOPS | 19.5 teraFLOPS |
| TF32 Tensor Core | 2.2 petaFLOPS | 1.8 petaFLOPS | 989 teraFLOPS | 989 teraFLOPS | 312 teraFLOPS |
| FP16/BF16 Tensor Core | 4.5 petaFLOPS | 3.5 petaFLOPS | 1979 teraFLOPS | 1979 teraFLOPS | 624 teraFLOPS |
| INT8 Tensor Core | 9 petaOPS | 7 petaOPS | 3958 teraOPS | 3958 teraOPS | 1248 teraOPS |
| FP8 Tensor Core | 9 petaFLOPS | 7 petaFLOPS | 3958 teraFLOPS | 3958 teraFLOPS | - |
| FP4 Tensor Core | 18 petaFLOPS | 14 petaFLOPS | - | - | - |
| GPU Memory | 192GB HBM3e | 192GB HBM3e | 141GB HBM3e | 80GB HBM3 | 80GB HBM2e |
| Memory Bandwidth | 8TB/s | 8TB/s | 4.8TB/s | 3.35TB/s | 2TB/s |
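One way to read the table is as ratios between generations. The sketch below copies the FP16/BF16 Tensor Core and memory rows into a dictionary and computes each GPU's peak ratio against a baseline. The structure and the helper name `speedup` are illustrative assumptions, and peak ratios are upper bounds rather than measured speedups.

```python
# FP16/BF16 Tensor Core throughput (teraFLOPS) and memory (GB), copied from the table.
SPECS = {
    "B200": {"fp16_tflops": 4500, "memory_gb": 192},
    "B100": {"fp16_tflops": 3500, "memory_gb": 192},
    "H200": {"fp16_tflops": 1979, "memory_gb": 141},
    "H100": {"fp16_tflops": 1979, "memory_gb": 80},
    "A100": {"fp16_tflops": 624, "memory_gb": 80},
}


def speedup(gpu: str, baseline: str, metric: str = "fp16_tflops") -> float:
    """Ratio of a peak spec between two GPUs (not a measured speedup)."""
    return SPECS[gpu][metric] / SPECS[baseline][metric]


for name in SPECS:
    print(
        f"{name}: {speedup(name, 'A100'):.2f}x A100 FP16 peak, "
        f"{speedup(name, 'A100', 'memory_gb'):.2f}x A100 memory"
    )
```

Note that the H200 and H100 share the same compute figures, so the H200's advantage shows up only in the memory rows.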


Future Prospects and Developments

NVIDIA continues to push the boundaries of GPU technology, with future models expected to deliver even greater performance and efficiency. As AI and HPC continue to evolve, NVIDIA Tensor Core GPUs will remain at the forefront, driving innovation and enabling new applications across various industries.

Bizon Workstations Maximizing the Power of NVIDIA Tensor Core GPUs

At Bizon, we offer high-performance workstations that are purpose-built to harness the full capabilities of NVIDIA’s latest Tensor Core GPUs, including the Blackwell B200 and B100, as well as the Hopper H200 and H100. Below are three top-tier models that can meet the demands of AI research, HPC, and large-scale simulations:

- Bizon G7000 G4: Designed for AI development and deep learning research, the G7000 can accommodate up to 8 NVIDIA GPUs, such as the A100, H100, or even the new Blackwell GPUs. With advanced cooling systems and multi-GPU configurations, it ensures smooth training of large neural networks and efficient handling of compute-heavy models.

- Bizon ZX6000: Ideal for data scientists and AI developers, the ZX6000 provides scalable GPU configurations, supporting multiple Blackwell and Hopper GPUs with water-cooled setups for optimal performance. Its ability to run complex simulations makes it a perfect fit for advanced AI workloads and large-scale data analytics.

- Bizon ZX9000: The most powerful workstation in our lineup, the ZX9000 can be configured with up to eight GPUs, making it an optimal choice for exascale computing and real-time AI inference applications. With state-of-the-art cooling and power management, the ZX9000 ensures sustained performance under the most demanding conditions.

Conclusion

NVIDIA Tensor Core GPUs, from the B200 to the A100, represent the pinnacle of computational power and efficiency in AI and high-performance computing. Each GPU in this lineup addresses specific needs, from the massive AI performance that the B200 brings to enterprise-level data centers to the more balanced and versatile capabilities of the H100 and A100, which are well-suited for deep learning research and real-time AI applications.

The B200 and B100, built on the advanced Blackwell architecture, push the boundaries of what is possible in AI and HPC, offering unparalleled performance and efficiency for the most demanding workloads. These GPUs are not just powerful; they are also optimized for cost-effectiveness, providing significant improvements in total cost of ownership over previous generations. This makes them a smart investment for organizations looking to scale their AI and HPC capabilities while managing costs.

The H200 and H100, with their robust Tensor Core counts and substantial memory configurations, are designed for high-throughput AI workloads and advanced machine learning models. These GPUs ensure that even the most complex computational tasks are handled with precision and speed, making them indispensable tools for researchers and developers pushing the limits of AI technology.

Meanwhile, the A100 remains a cornerstone in the AI community, offering a versatile platform that excels in both AI supercomputing and large-scale data analytics. Its adaptability makes it a preferred choice for a wide range of applications, from scientific research to financial modeling.

At Bizon, we understand that selecting the right GPU is critical to achieving optimal performance for your specific workload. That's why we integrate these cutting-edge NVIDIA Tensor Core GPUs into our high-performance workstations, such as the Bizon G7000, ZX6000, and ZX9000. Our workstations are designed to maximize the potential of these GPUs, ensuring that our customers have access to the best tools available for their computational demands. Whether you are working on pioneering AI research, developing complex machine learning models, or running large-scale simulations, Bizon workstations equipped with NVIDIA Tensor Core GPUs provide the power and reliability you need to succeed in today's fast-paced technological landscape.