
What is the Best GPU for Data Science in 2025?

GPUs (graphics processing units) are extremely important for data science and deep learning. These processors were originally designed for rendering graphics, but have become vital for artificial intelligence and analyzing large data sets. This article will explain why GPUs are so useful for data science work.

We'll look at how GPUs are built to run thousands of simple arithmetic operations in parallel, which lets them handle highly parallel workloads much faster than traditional CPUs. This massive parallelism makes GPUs well suited to training deep learning models and processing large amounts of data efficiently. The article also covers the key GPU features that enable these capabilities in data science applications.
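To make "many calculations in parallel" concrete, here is a minimal sketch of how a CUDA-style kernel typically splits an element-wise operation: each thread derives one global index from its block and thread number and computes a single output element. This is plain Python that runs the threads one after another, so it shows the decomposition of the work, not the speedup; the function names `vector_add_kernel`, `launch`, and `vector_add` are hypothetical.

```python
# Sequential emulation of a GPU kernel launch. On real hardware every
# (block, thread) pair would run concurrently; here they run in a loop.

def vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out):
    """Work done by one (simulated) GPU thread: a single output element."""
    i = block_idx * block_dim + thread_idx  # global index, CUDA-style
    if i < len(out):  # guard: the last block may contain spare threads
        out[i] = a[i] + b[i]


def launch(kernel, grid_dim, block_dim, *args):
    """Run every thread of every block (sequentially, for illustration)."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


def vector_add(a, b, block_dim=256):
    n = len(a)
    out = [0.0] * n
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    return out


if __name__ == "__main__":
    a = [float(x) for x in range(1000)]
    b = [2.0 * x for x in range(1000)]
    c = vector_add(a, b)
    print(c[:3], c[-1])  # [0.0, 3.0, 6.0] 2997.0
```

Because no thread depends on another thread's result, a GPU can schedule thousands of them at once; workloads with that property are the ones that benefit most.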

Choosing the Right GPU for Data Science

When it comes to selecting a GPU for data science tasks, there are several key factors to consider. The right choice can significantly impact the performance and efficiency of your workloads.

One of the most important considerations is the computational requirements of your specific use case. The number of CUDA cores, the parallel processing units within an NVIDIA GPU, plays a major role in how quickly computations can be performed. The amount of memory on the GPU matters just as much: the model's parameters, its training state, and each batch of data must fit in GPU memory, so memory capacity limits the size of the models and datasets you can work with.
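A quick way to check whether a model will fit is a bytes-per-parameter estimate. The sketch below assumes a dense model and two common rules of thumb that are not from the article: about 2 bytes per parameter to serve FP16/BF16 weights for inference, and about 16 bytes per parameter for FP32 training with the Adam optimizer (weights, gradients, and two optimizer moments). Activations and framework overhead are ignored, so real usage will be higher.

```python
# Rough GPU memory estimate from parameter count (decimal GB).
# Assumed rule-of-thumb byte counts; activations and overhead are ignored.
BYTES_PER_PARAM = {
    "inference_fp16": 2,    # FP16/BF16 weights only
    "train_fp32_adam": 16,  # FP32 weights (4) + gradients (4) + Adam moments (8)
}


def estimate_memory_gb(num_params, mode):
    """Lower-bound memory estimate in GB for the given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9


def fits(num_params, mode, gpu_memory_gb):
    """True if the estimate fits within the GPU's memory."""
    return estimate_memory_gb(num_params, mode) <= gpu_memory_gb


if __name__ == "__main__":
    params = 7e9  # hypothetical 7-billion-parameter model
    for mode in BYTES_PER_PARAM:
        print(mode, estimate_memory_gb(params, mode), "GB")
    # inference_fp16 14.0 GB
    # train_fp32_adam 112.0 GB
```

The gap between the two modes also illustrates why training hardware usually needs far more memory than inference hardware for the same model.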

Power consumption and thermal management are also important factors to consider. Data science workloads can be computationally intensive, and GPUs with higher performance tend to consume more power and generate more heat. Ensuring that your system can handle the power requirements and provide adequate cooling is essential for stable and efficient operation.

Finally, your performance targets should guide your GPU selection. If you're primarily focused on training deep learning models, you'll want a GPU that can minimize training time. On the other hand, if you're more interested in deploying trained models for inference, you'll prioritize GPUs that can deliver rapid inference speeds.

As of May 2024, the best GPUs for data science are primarily from NVIDIA, with some strong entries from AMD as well. Here are some of the top choices:

-

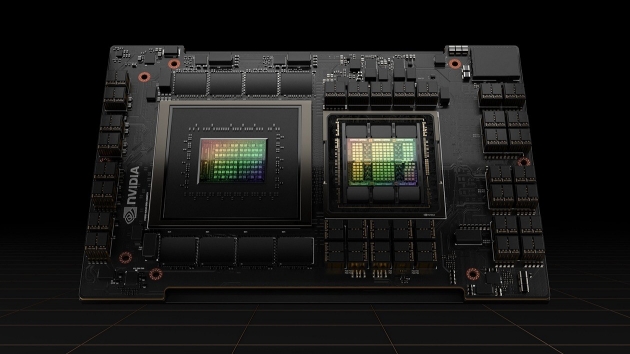

NVIDIA H100: This is one of the latest and most powerful GPUs specifically designed for AI and data science applications. It features 16,896 CUDA Cores and 80GB of HBM3 memory, making it ideal for handling large datasets and complex models in enterprise environments.

- NVIDIA A100: Known for its versatility and power, the A100 offers excellent performance with 6,912 CUDA Cores and up to 80GB of HBM2e memory. It's a top choice for deep learning and large-scale data science tasks.

- NVIDIA RTX 4090: This GPU, built on the Ada Lovelace architecture, provides 16,384 CUDA Cores and 24GB of GDDR6X memory. It's suitable for smaller-scale tasks and is popular among hobbyists and small businesses.

- NVIDIA RTX A6000: Offering a good balance of performance and cost, the A6000 comes with 10,752 CUDA Cores and 48GB of GDDR6 memory. It's an excellent choice for medium-scale data science projects.

- AMD MI300X: This GPU features 19,456 stream processors and 192GB of HBM3 memory, making it a powerful option for high-performance computing and AI tasks. Its very large memory capacity is drawing growing interest from the data science community; note that it runs on AMD's ROCm software stack rather than NVIDIA's CUDA.

When selecting a GPU, consider the specific requirements of your data science tasks, including memory size, core count, clock speed, and power consumption. The best choice depends on the scale of your projects and your budget.
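One way to apply these criteria is to encode the specifications listed above and filter by your memory requirement first, since a model that does not fit cannot run at all. The sketch below uses only the figures from the list (the H100 core count is for the SXM5 version); the `GPU` class and `shortlist` function are hypothetical helpers. Core counts are used only as a tie-breaker because CUDA cores and AMD stream processors are not directly comparable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GPU:
    name: str
    cores: int        # CUDA cores (NVIDIA) or stream processors (AMD)
    memory_gb: int
    memory_type: str


# Figures as listed in the article (H100: SXM5 version).
GPUS = [
    GPU("NVIDIA H100", 16_896, 80, "HBM3"),
    GPU("NVIDIA A100 80GB", 6_912, 80, "HBM2e"),
    GPU("NVIDIA RTX 4090", 16_384, 24, "GDDR6X"),
    GPU("NVIDIA RTX A6000", 10_752, 48, "GDDR6"),
    GPU("AMD MI300X", 19_456, 192, "HBM3"),
]


def shortlist(gpus, min_memory_gb):
    """GPUs with enough memory, largest memory first (ties: more cores first)."""
    candidates = [g for g in gpus if g.memory_gb >= min_memory_gb]
    return sorted(candidates, key=lambda g: (g.memory_gb, g.cores), reverse=True)


if __name__ == "__main__":
    print([g.name for g in shortlist(GPUS, 40)])
    # ['AMD MI300X', 'NVIDIA H100', 'NVIDIA A100 80GB', 'NVIDIA RTX A6000']
```

In practice you would extend the record with price, power draw, and software stack before ranking, since the article lists those as deciding factors too.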

What Problems Does Data Science Solve?

Data science is a broad field that encompasses various techniques and methods for extracting insights and knowledge from data. It finds applications in diverse areas, ranging from business analytics to scientific research.

One of the primary applications of data science is machine learning, which involves developing algorithms and models that can learn from data and make predictions or decisions without being explicitly programmed. Deep learning, a subset of machine learning inspired by the structure and function of the human brain, has been particularly successful in areas such as computer vision, natural language processing, and speech recognition.

These data science techniques are driving advancements in artificial intelligence (AI), enabling systems to perform tasks that would typically require human intelligence, such as visual perception, decision-making, and language understanding.

Data science is used to solve a wide range of problems, including:

- Predictive maintenance in manufacturing and industrial settings

- Fraud detection in financial transactions

- Personalized recommendations for e-commerce and entertainment platforms

- Autonomous driving and advanced driver assistance systems

- Medical image analysis and disease diagnosis

- Sentiment analysis and opinion mining from social media data

By leveraging the power of data, algorithms, and computing resources, data science provides valuable insights and solutions across various industries and domains.

What to Look for in a GPU for Data Science

Performance Metrics

When evaluating GPUs for data science workloads, several performance metrics come into play. These metrics directly impact the speed and efficiency of your computations.

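CUDA cores and stream processors: CUDA cores (NVIDIA's term) and stream processors (AMD's term for comparable units) are the fundamental building blocks of a GPU's parallel processing capabilities. A higher core count generally translates to better performance for highly parallel workloads, such as those encountered in deep learning and data processing. However, core counts are only meaningful between similar architectures: clock speed, per-core throughput, and specialized units such as tensor cores also determine real-world performance.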
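Core count alone does not give throughput; a common back-of-the-envelope figure is theoretical peak FP32 throughput = cores × 2 FLOPs per cycle (one fused multiply-add) × clock speed. The sketch below assumes boost clocks of 2.52 GHz for the RTX 4090 and 1.41 GHz for the A100, taken from vendor spec sheets rather than the article; check the current data sheet before relying on them.

```python
def peak_fp32_tflops(cores, boost_clock_ghz, flops_per_core_per_cycle=2):
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each core completes one fused multiply-add (counted as 2 FLOPs)
    per clock cycle. cores * FLOPs/cycle * GHz gives GFLOPS; /1000 -> TFLOPS.
    Real workloads rarely reach this peak.
    """
    return cores * flops_per_core_per_cycle * boost_clock_ghz / 1_000


if __name__ == "__main__":
    # Boost clocks below are assumptions, not figures from the article.
    print(f"RTX 4090: {peak_fp32_tflops(16_384, 2.52):.1f} TFLOPS")  # RTX 4090: 82.6 TFLOPS
    print(f"A100:     {peak_fp32_tflops(6_912, 1.41):.1f} TFLOPS")   # A100:     19.5 TFLOPS
```

The A100's much lower FP32 figure does not mean it is slower for deep learning: much of its AI throughput comes from its tensor cores, which this formula ignores.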

Memory bandwidth and capacity: The memory bandwidth determines how quickly data can be transferred between the GPU's memory and its processing units. Higher memory bandwidth is crucial for data-intensive tasks, as it ensures that the GPU's computational resources are consistently fed with data, preventing bottlenecks. Additionally, the memory capacity determines the size of datasets and models that can be processed efficiently on the GPU.
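The bottleneck argument can be quantified with the roofline model: attainable throughput is the lower of the compute peak and memory bandwidth × arithmetic intensity (FLOPs performed per byte moved). This is a minimal sketch; the RTX 4090 figures (82.6 TFLOPS FP32, about 1,008 GB/s) and the intensity of 200 FLOP/byte for a large matrix multiply are assumptions for illustration.

```python
def attainable_tflops(peak_tflops, bandwidth_gb_s, flops_per_byte):
    """Roofline estimate: a kernel is capped by compute or by memory traffic.

    bandwidth (GB/s) * arithmetic intensity (FLOP/byte) = GFLOP/s that the
    memory system can feed; divide by 1000 for TFLOPS.
    """
    memory_bound = bandwidth_gb_s * flops_per_byte / 1_000
    return min(peak_tflops, memory_bound)


if __name__ == "__main__":
    peak, bw = 82.6, 1_008  # assumed RTX 4090 figures: FP32 TFLOPS, GB/s
    # c[i] = a[i] + b[i] in FP32: 1 FLOP per 12 bytes (two 4-byte reads, one write)
    print(f"{attainable_tflops(peak, bw, 1 / 12):.3f}")  # 0.084 -> memory-bound
    # Hypothetical large matrix multiply that reuses each loaded value many times
    print(f"{attainable_tflops(peak, bw, 200):.1f}")     # 82.6 -> compute-bound
```

Simple element-wise operations use only a tiny fraction of the compute peak because they are limited by how fast data arrives, which is exactly why memory bandwidth matters for data-intensive work.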

Tensor cores (for AI workloads): Tensor cores are specialized NVIDIA processing units that accelerate matrix multiply-accumulate operations, the computation that dominates most deep learning models, typically using reduced-precision formats such as FP16 or BF16. GPUs equipped with tensor cores can significantly boost the performance of AI and machine learning workloads, enabling faster training and inference.
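The core tensor-core operation is a fused matrix multiply-accumulate, D = A × B + C, on a small tile, usually reading inputs in reduced precision (for example FP16) and accumulating in higher precision (for example FP32). The pure-Python sketch below only mimics the numerics: it rounds inputs to IEEE half precision with the standard `struct` module's `e` format and uses Python's 64-bit floats as a stand-in for the accumulator. Real tile shapes and supported precisions vary by GPU generation, and `to_fp16` and `mma` are hypothetical names.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def mma(a, b, c):
    """D = A @ B + C with FP16-rounded inputs and wider accumulation.

    a is n x k, b is k x m, c is n x m (lists of lists). Python's 64-bit
    floats stand in for the FP32 accumulator of real hardware.
    """
    n, k, m = len(a), len(b), len(b[0])
    a16 = [[to_fp16(v) for v in row] for row in a]
    b16 = [[to_fp16(v) for v in row] for row in b]
    return [
        [c[i][j] + sum(a16[i][p] * b16[p][j] for p in range(k)) for j in range(m)]
        for i in range(n)
    ]


if __name__ == "__main__":
    print(to_fp16(0.1))  # 0.0999755859375 (0.1 is not exact in FP16)
    print(mma([[1, 0], [0, 1]], [[1, 2], [3, 4]], [[1, 1], [1, 1]]))
    # [[2.0, 3.0], [4.0, 5.0]]
```

Storing inputs in 16 bits halves memory traffic compared with FP32, while the wider accumulator limits the rounding error that builds up over long sums.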

Power Efficiency

In addition to raw performance, power efficiency is an important consideration when selecting a GPU for data science tasks. High-performance GPUs can be energy-hungry, and managing power consumption and heat dissipation is crucial for optimal operation and longevity.

Thermal Design Power (TDP): TDP is the amount of heat, and therefore roughly the power, that a GPU's cooling solution is designed to dissipate under sustained heavy load. A lower TDP means lower power draw and reduced cooling requirements, but it does not by itself make a GPU more efficient: efficiency is better measured as performance per watt, and a higher-TDP GPU that finishes a job much faster can use less total energy.
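The difference between power draw and total energy is simple arithmetic: energy (kWh) = power (W) × hours / 1,000. The sketch below treats TDP as a constant power draw, which is a simplification, and compares two hypothetical GPUs: GPU A draws 450 W and finishes a job in 10 hours, while GPU B draws 300 W but needs 20 hours. The electricity price of $0.15 per kWh is also an assumption.

```python
def energy_kwh(power_watts, hours, num_gpus=1):
    """Energy used at a constant power draw (TDP used as a proxy for draw)."""
    return power_watts * num_gpus * hours / 1_000


def energy_cost(power_watts, hours, price_per_kwh, num_gpus=1):
    """Electricity cost; ignores CPU, cooling, and idle power."""
    return energy_kwh(power_watts, hours, num_gpus) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical job: GPU A is faster but has the higher TDP.
    print("GPU A:", energy_kwh(450, 10), "kWh")  # GPU A: 4.5 kWh
    print("GPU B:", energy_kwh(300, 20), "kWh")  # GPU B: 6.0 kWh
    print(f"GPU A cost: ${energy_cost(450, 10, 0.15):.3f}")
    print(f"GPU B cost: ${energy_cost(300, 20, 0.15):.3f}")
```

Here the lower-TDP GPU uses a third more energy for the same job, which is why performance per watt is a better efficiency measure than TDP alone.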

Energy efficiency considerations: Manufacturers often incorporate energy-efficient technologies into their GPUs to optimize power consumption without compromising performance. These may include advanced power management features, specialized low-power modes, and efficient voltage regulation techniques. Evaluating the energy efficiency of a GPU can help strike a balance between performance and power consumption, ensuring reliable operation and reducing environmental impact.

Software and Driver Support

Seamless integration with software tools and driver support is crucial for harnessing the full potential of a GPU in data science applications. Compatibility with popular frameworks and libraries can greatly impact productivity and ease of development.

CUDA toolkit and cuDNN compatibility: NVIDIA's CUDA toolkit provides a comprehensive development environment for leveraging the parallel computing capabilities of NVIDIA GPUs. It includes the nvcc compiler, GPU-accelerated libraries, and debugging and profiling tools for GPU-accelerated applications. Additionally, the cuDNN library offers highly optimized implementations of deep learning primitives, enabling efficient training and inference on NVIDIA GPUs.

Driver support and updates: Regular driver updates from GPU manufacturers are essential for ensuring optimal performance, stability, and compatibility with the latest software releases. Well-supported drivers can provide bug fixes, performance optimizations, and enhanced functionality, enabling seamless integration of GPUs into data science workflows.
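Before updating drivers or installing a framework, it helps to record which GPUs and driver version a machine actually has. This sketch calls NVIDIA's `nvidia-smi` tool with its `--query-gpu` and `--format=csv,noheader` options and returns an empty list when the tool is not installed. The helper names are hypothetical, and the sample line in the usage note is made up for illustration.

```python
import shutil
import subprocess

QUERY = "name,memory.total,driver_version"


def parse_gpu_line(line):
    """Split one CSV line of nvidia-smi output into a dict."""
    name, memory, driver = (field.strip() for field in line.split(","))
    return {"name": name, "memory": memory, "driver_version": driver}


def list_nvidia_gpus():
    """Return installed NVIDIA GPUs, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    if result.returncode != 0:
        return []
    return [parse_gpu_line(line) for line in result.stdout.splitlines() if line.strip()]


if __name__ == "__main__":
    for gpu in list_nvidia_gpus():
        print(gpu)
```

For a made-up line such as `NVIDIA GeForce RTX 4090, 24564 MiB, 550.54.14`, `parse_gpu_line` returns the name, the memory string, and the driver version as separate fields, which you can then compare against a framework's stated driver requirements.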

Cost and Availability

While performance and features are paramount when selecting a GPU for data science, cost and availability also play a significant role in the decision-making process. GPUs can vary widely in price, and striking a balance between capabilities and budget is crucial.

Price ranges for different GPU models: GPU prices can range from a few hundred dollars for entry-level models to thousands of dollars for high-end, enterprise-grade solutions. It's essential to evaluate the performance requirements of your data science workloads and balance them against your budget constraints. Often, mid-range GPUs can offer a sweet spot of performance and value for many data science applications.

Availability and supply chain factors: The availability of GPUs can be influenced by various supply chain factors, such as manufacturing capacities, global demand, and supply disruptions. Checking the availability of your desired GPU model from reputable vendors and considering potential lead times is advisable to ensure timely procurement and uninterrupted workflows.

Recommended GPUs in 2024 for Data Science

High-Performance Multi-GPU Solutions

For demanding data science workloads that require exceptional computational power, Bizon offers several high-end multi-GPU solutions that leverage the latest NVIDIA GPUs and AMD EPYC CPUs.

BIZON ZX9000 – Water-cooled 8 GPU Server

If you need unparalleled performance for training large-scale deep learning models, simulations, or high-performance computing workloads, the BIZON ZX9000 is an excellent choice. Powered by up to 8 water-cooled NVIDIA RTX A6000, A100, or H100 GPUs and dual AMD EPYC 7003/9004 CPUs with up to 256 cores, this server delivers exceptional computational power with up to 8 TB of system memory.[1]
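A common way to use several GPUs for training is data parallelism: each GPU computes gradients on its own slice of the batch, and the results are averaged (on real systems, with an all-reduce). The sketch below simulates this for a hypothetical one-parameter linear model with a mean-squared-error loss and checks that, with equal-sized shards, averaging the per-GPU gradients reproduces the full-batch gradient. The model, data, and function names are assumptions for illustration; real frameworks also handle communication and synchronization.

```python
def mse_gradient(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_gradient(w, xs, ys, num_gpus):
    """Split the batch into equal shards (one per simulated GPU) and average.

    On a real server each shard's gradient is computed on a different GPU
    and averaged by an all-reduce; here it is a plain loop.
    """
    if len(xs) % num_gpus:
        raise ValueError("batch size must be divisible by num_gpus")
    shard = len(xs) // num_gpus
    grads = [
        mse_gradient(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_gpus)
    ]
    return sum(grads) / num_gpus


if __name__ == "__main__":
    xs = [float(i) for i in range(16)]
    ys = [3.0 * x + 1.0 for x in xs]  # hypothetical data
    w = 0.5
    print(mse_gradient(w, xs, ys), data_parallel_gradient(w, xs, ys, num_gpus=8))
```

Because each GPU only processes one eighth of the batch, an 8-GPU machine can work through a batch roughly eight times faster before communication overhead is counted.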

The water-cooling system ensures efficient thermal management, enabling stable and reliable operation even under intense computational loads. Additionally, the modular design with quick disconnect technology allows for easy GPU expandability, making this server a scalable and future-proof solution.

BIZON ZX6000 G2 – Water-cooled Dual EPYC Workstation

For those seeking a balance between performance and cost-effectiveness, the BIZON ZX6000 G2 is a compelling option. Equipped with up to 4 NVIDIA GPUs (A100, RTX 4090, RTX 4080, RTX A6000, or RTX A5000) and dual AMD EPYC 7003/9004 CPUs with up to 256 cores, this water-cooled workstation offers ample computational power with up to 8 TB of system memory.

Like the ZX9000, it relies on water cooling to keep operation stable and reliable under intense computational loads. This versatile workstation is suitable for a wide range of data science tasks, from model development to deployment in production environments.

Entry-Level and Compact Solutions

If you're just starting your data science journey or are short on space, Bizon offers air-cooled workstations with powerful GPU options.

Dual GPU Ai Workstation

This compact solution is powered by up to 2 NVIDIA RTX 6000, RTX 5000, RTX 4090, or RTX 4080 GPUs, along with an Intel Core i9-14900K CPU. It offers ample performance for smaller machine learning models, data preprocessing, and basic deep learning tasks, with up to 192 GB of DDR5 memory.

Quad GPU Ai Workstation

For those requiring more GPU power in a compact form factor, the Quad GPU Ai Workstation is an excellent choice. Featuring up to 4 NVIDIA RTX 6000 or 2 RTX 4090/4080 GPUs, paired with an AMD Threadripper PRO CPU with up to 96 cores, this workstation delivers substantial computational capabilities with up to 1 TB of DDR5 memory.

Regardless of your specific requirements, Bizon's range of NVIDIA data science workstations offers scalable solutions to meet your computational needs. From high-end multi-GPU powerhouses to compact entry-level options, their product lineup caters to a wide spectrum of data science use cases and budgets, ensuring efficient and accelerated performance for your workflows.

Conclusion

As we navigate the ever-evolving landscape of data science, the pivotal role of GPUs in driving innovation and accelerating insights cannot be overstated. From deep learning model training to large-scale data processing, the parallel computing capabilities of these specialized processors have revolutionized the way we approach complex computational challenges.

Throughout this exploration, we have delved into the intricate factors that shape the selection of an optimal GPU for data science tasks. Performance metrics such as CUDA cores, memory bandwidth, and tensor cores have emerged as critical considerations, directly impacting the speed and efficiency of our workloads. Additionally, power efficiency, software compatibility, and cost-effectiveness have proven to be equally important factors in ensuring a well-rounded and sustainable GPU solution.

Looking ahead, the future of GPU technology promises even more exciting developments. Advancements in semiconductor manufacturing processes, architectural innovations, and the integration of emerging technologies like quantum computing and neuromorphic processors could further propel the capabilities of GPUs, unlocking new frontiers in data science and artificial intelligence.

As we chart our course through this thrilling era of technological progress, it is essential to carefully evaluate our specific needs, balancing performance requirements with budgetary constraints and long-term scalability. Whether you're a data science enthusiast, a researcher, or a business professional, the right GPU can be the catalyst that transforms your vision into reality, empowering you to unlock the boundless potential of data and drive meaningful change in the world around us.
