Table of Contents

- Local deep learning workstation 10x cheaper than web-based services

- Customize your computer for deep learning

- Create your own computer

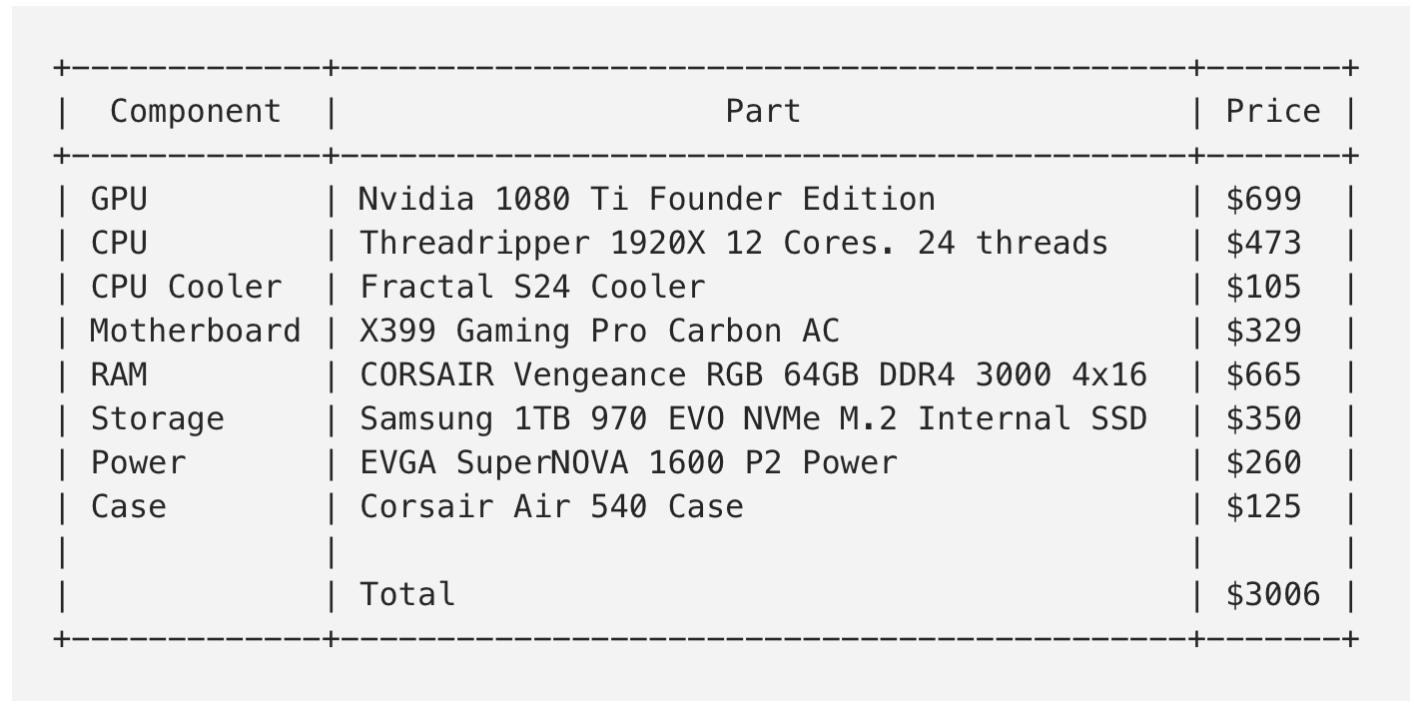

- Cost of components

- Save with your own deep learning PC

- Pre-builds save money

- Cloud-based costs more

- Breaking even with a pre-build

- GPU Performance on par with AWS

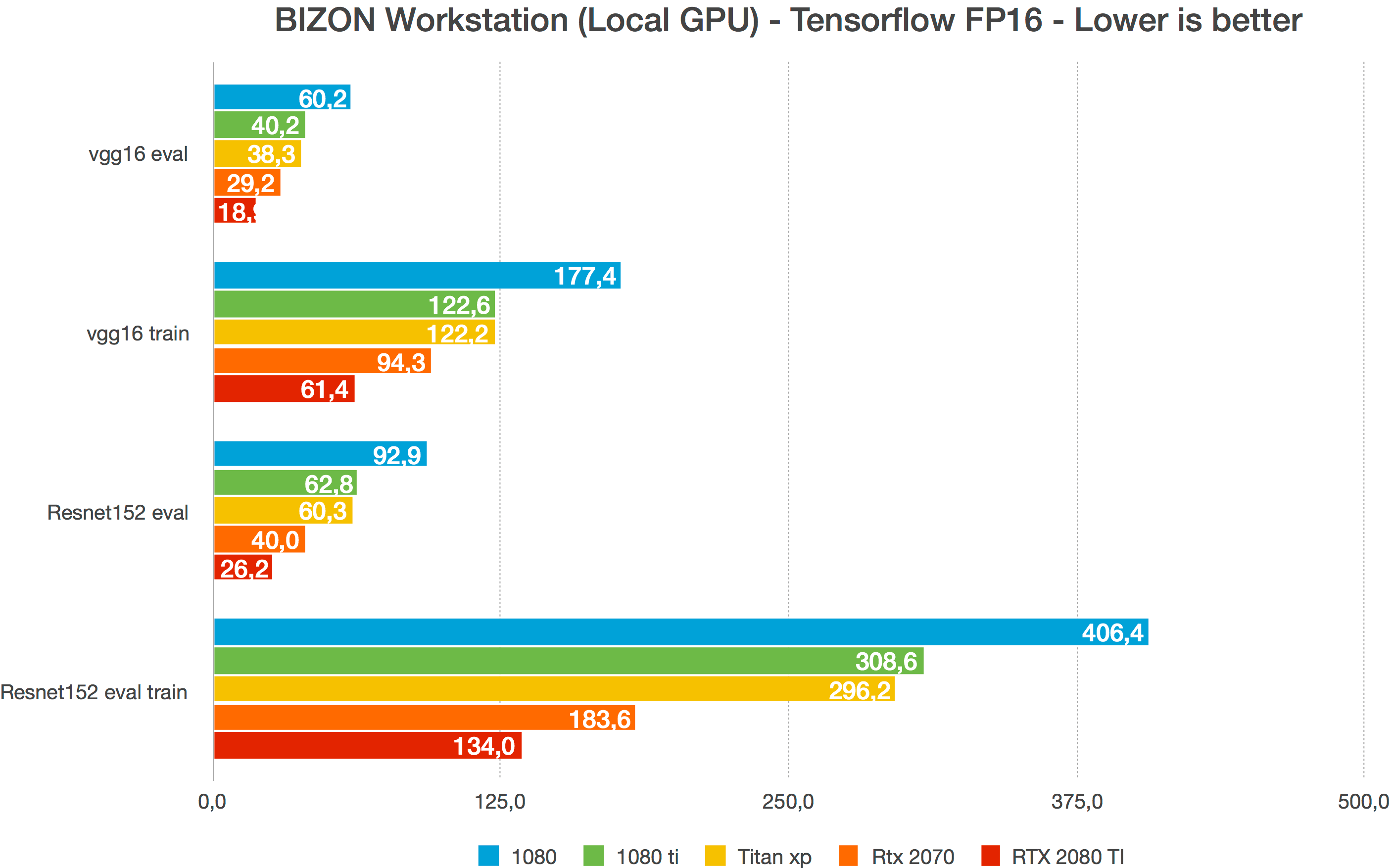

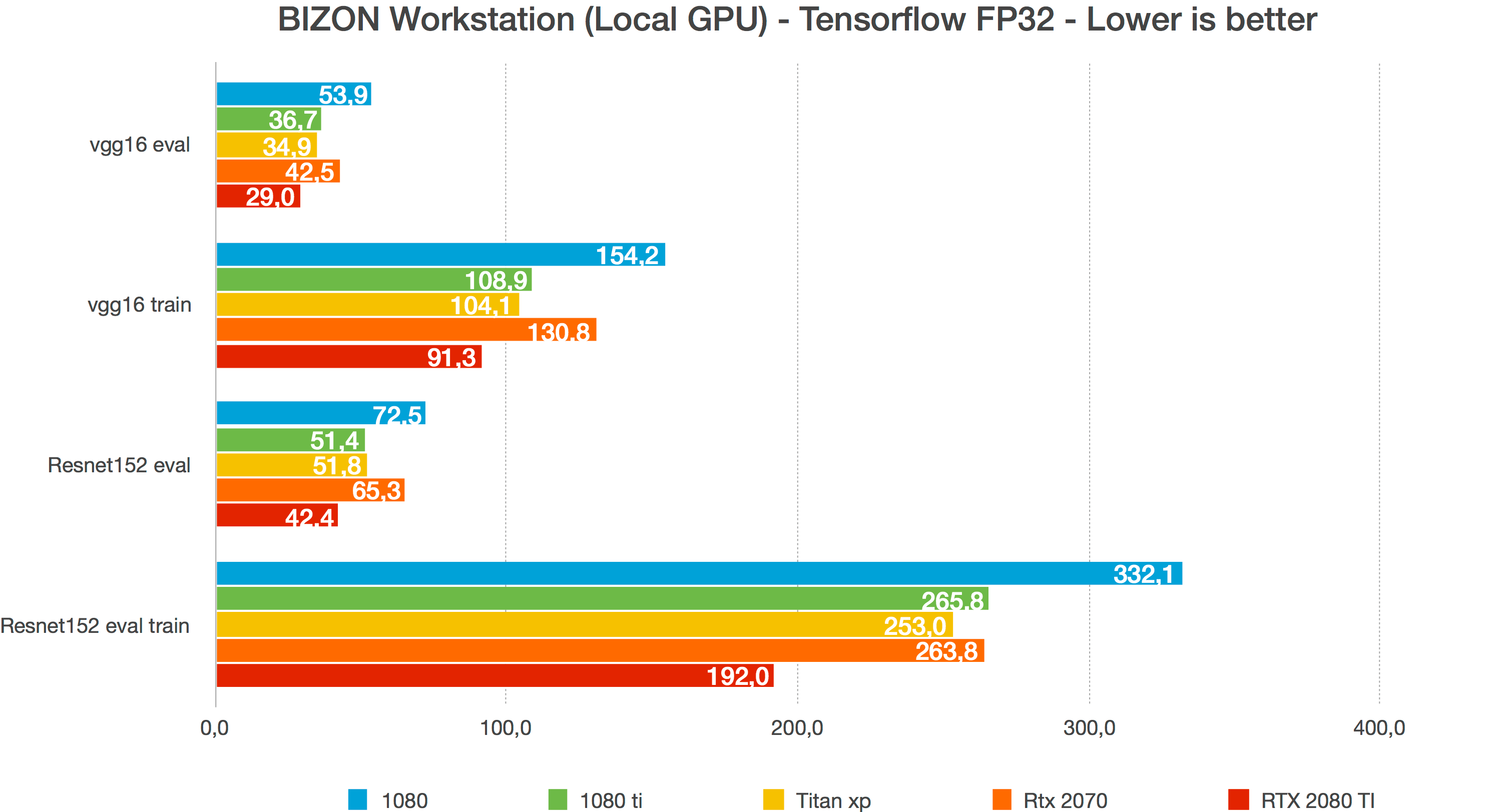

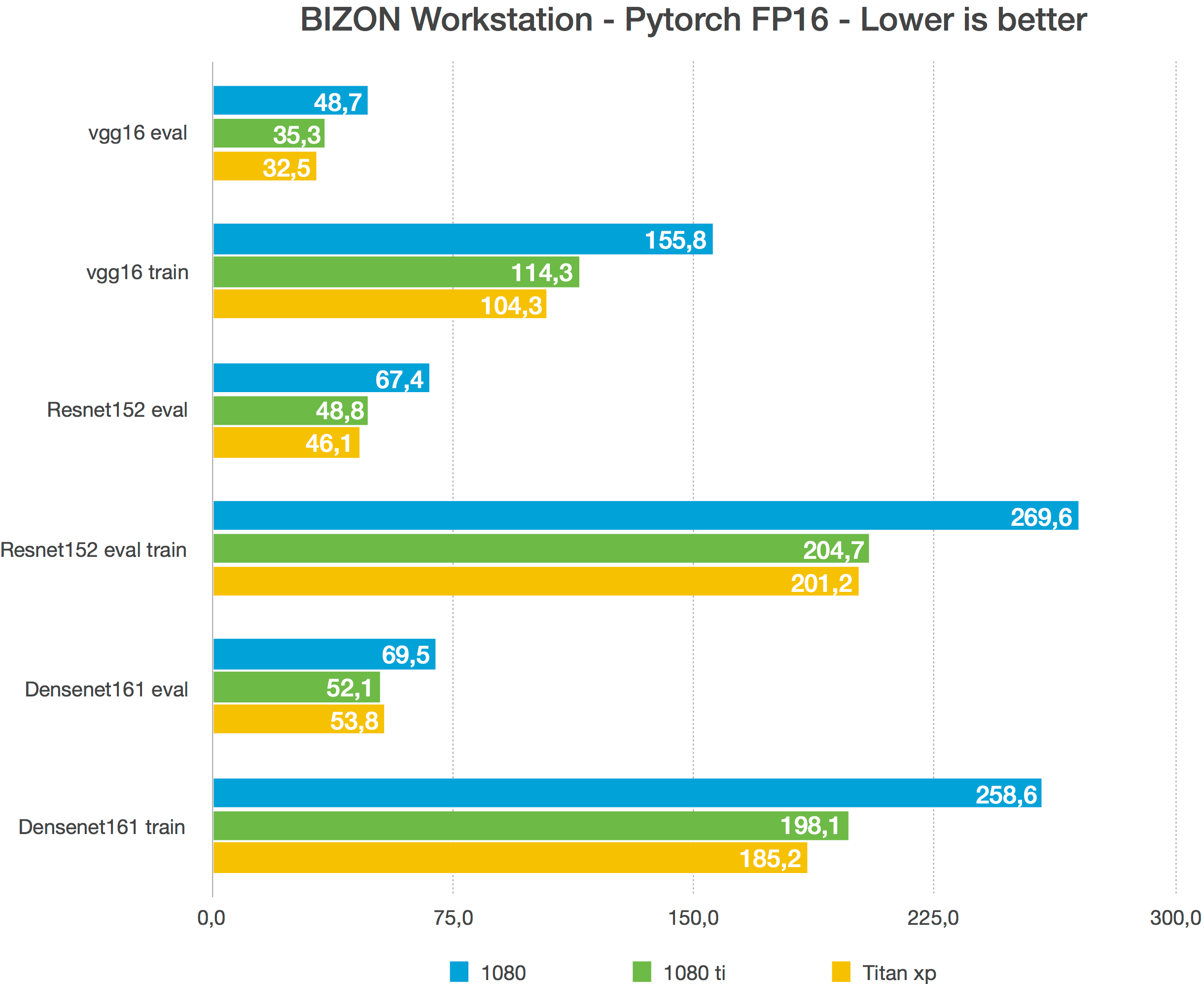

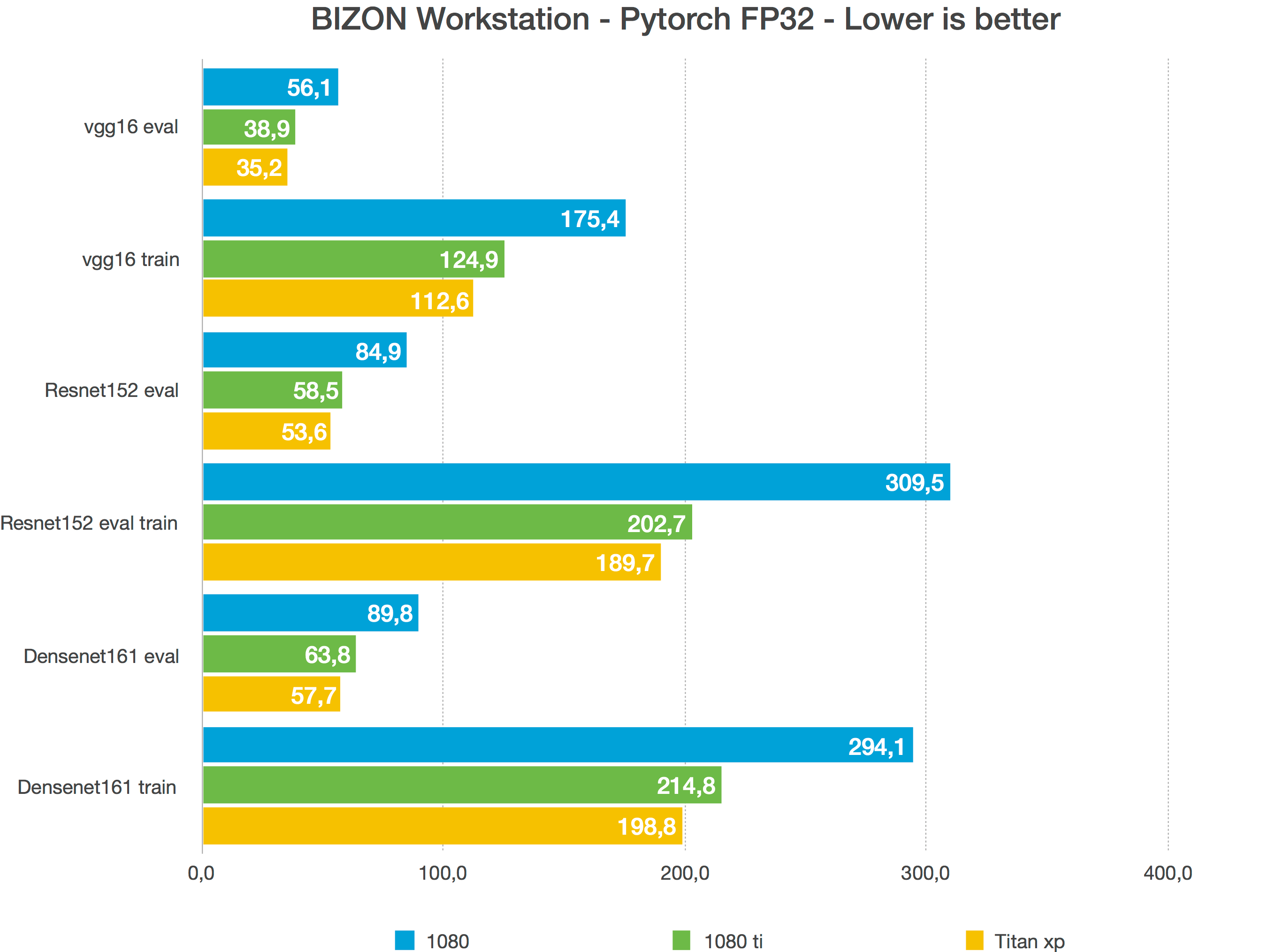

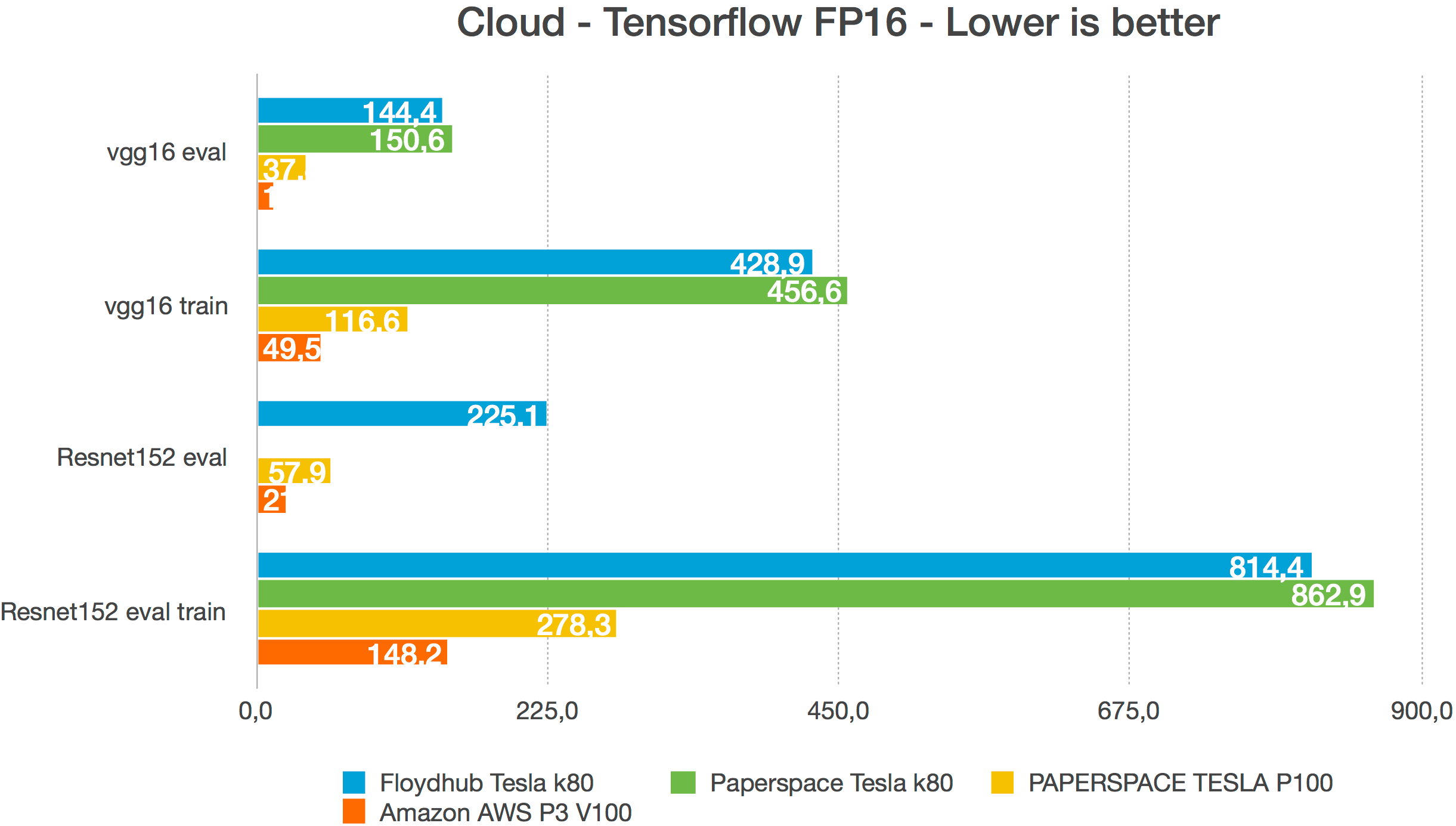

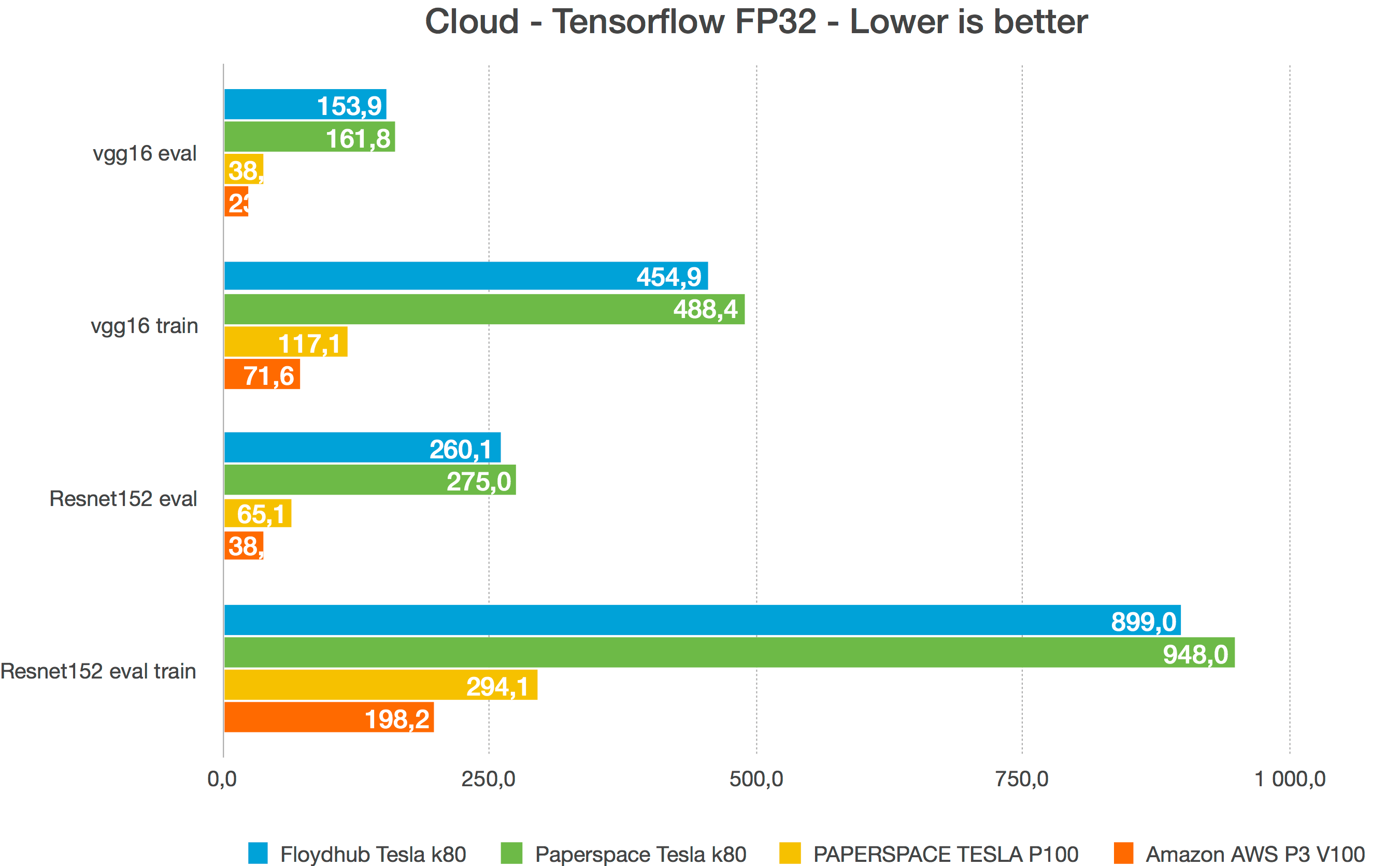

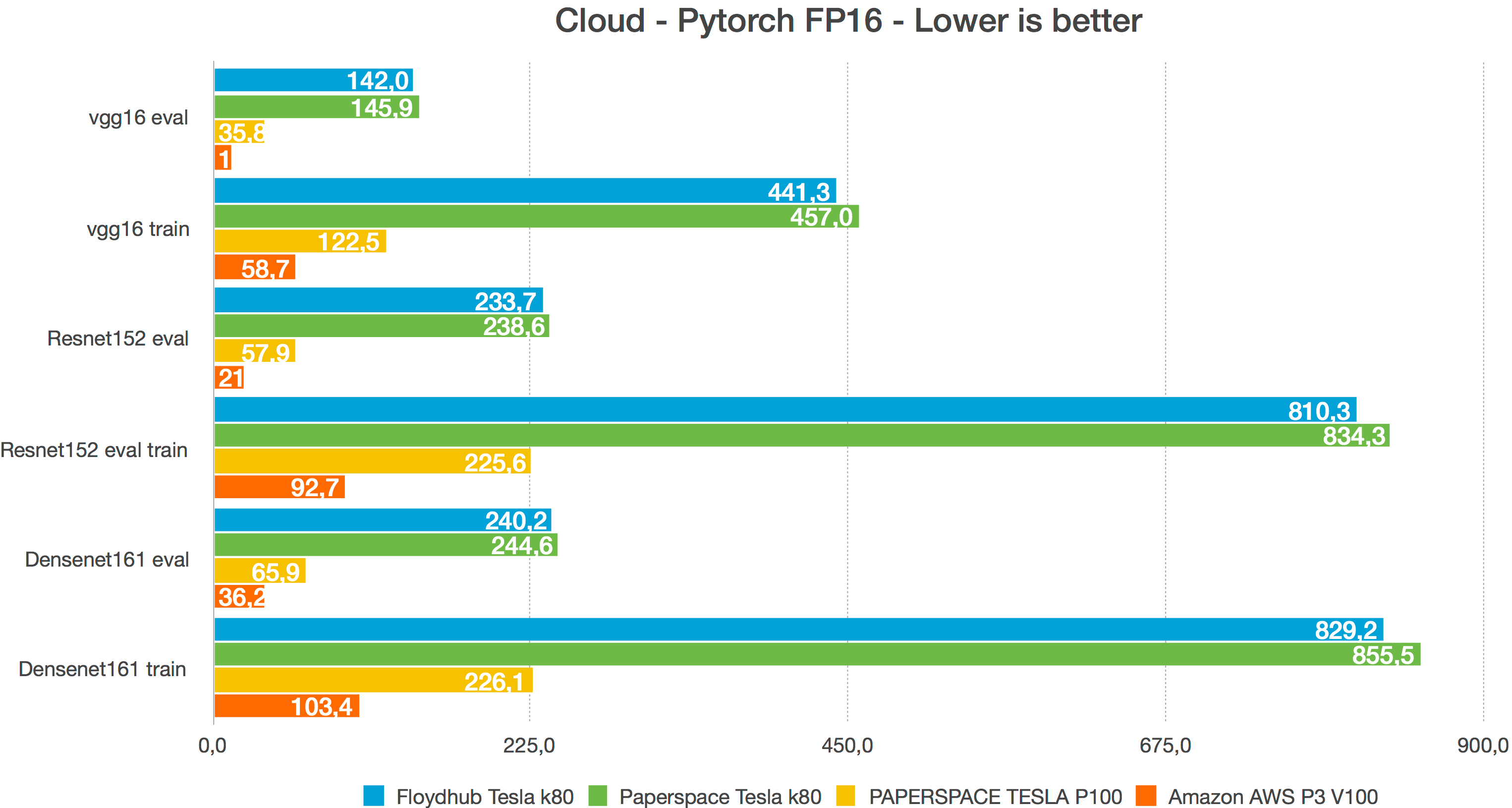

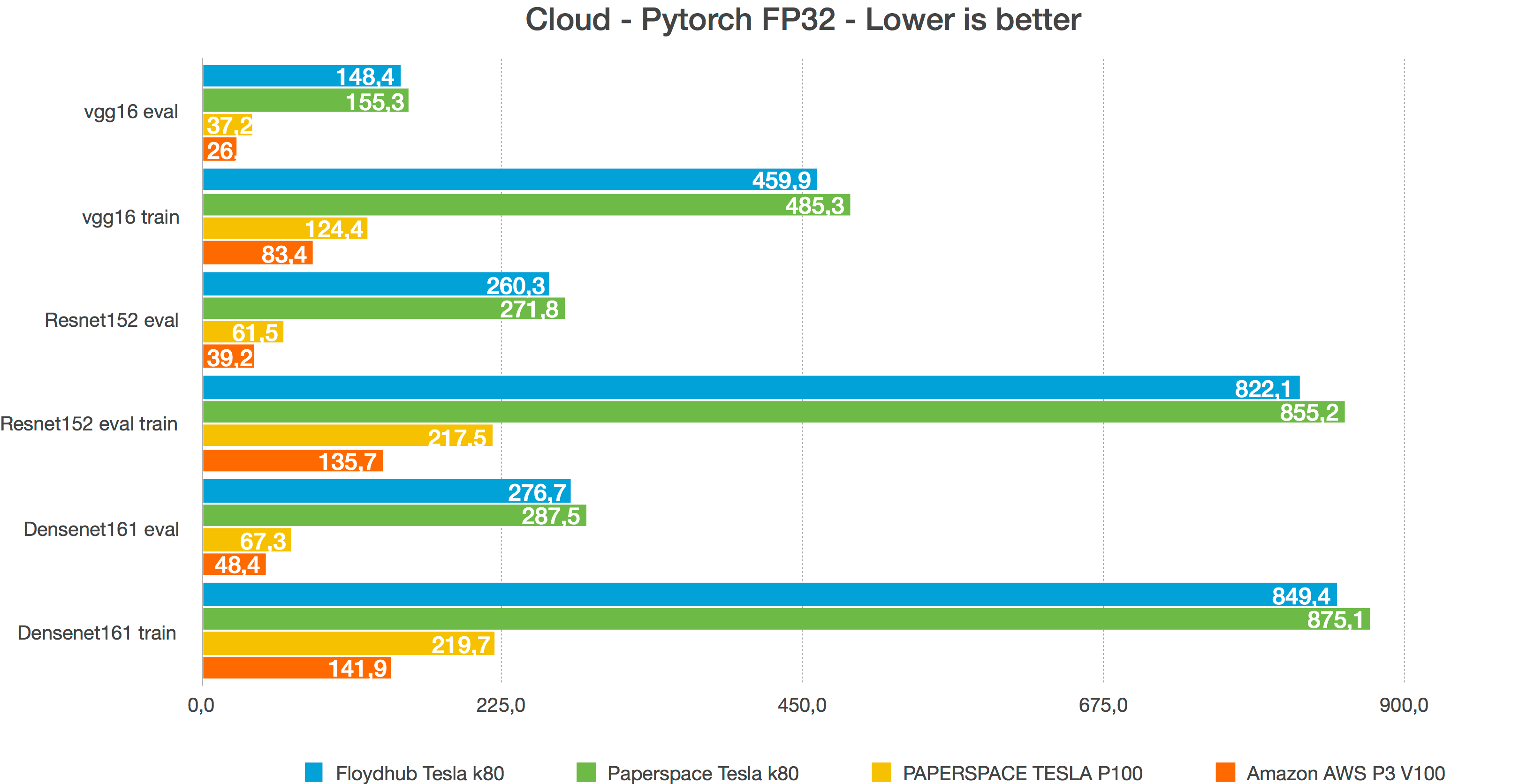

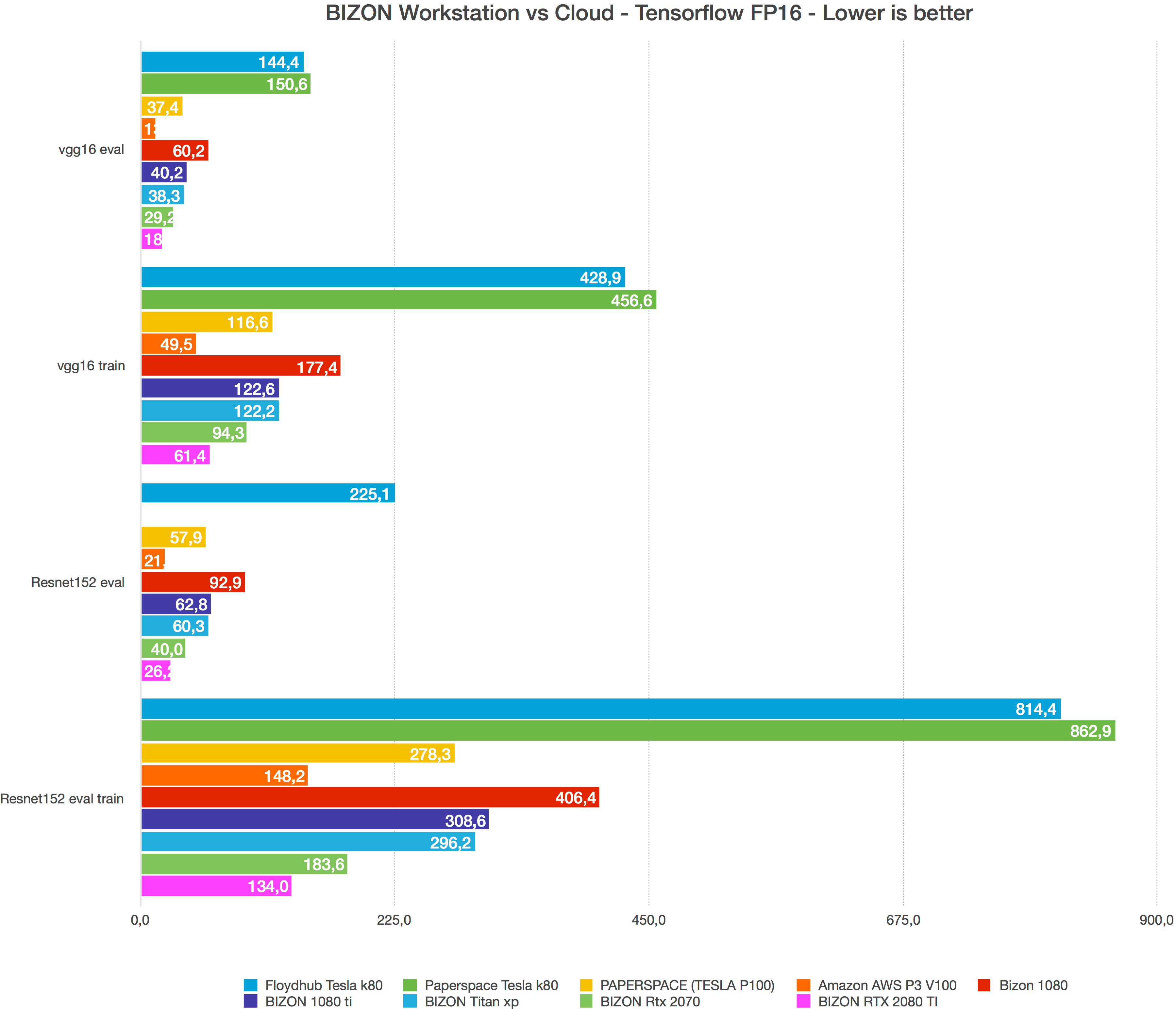

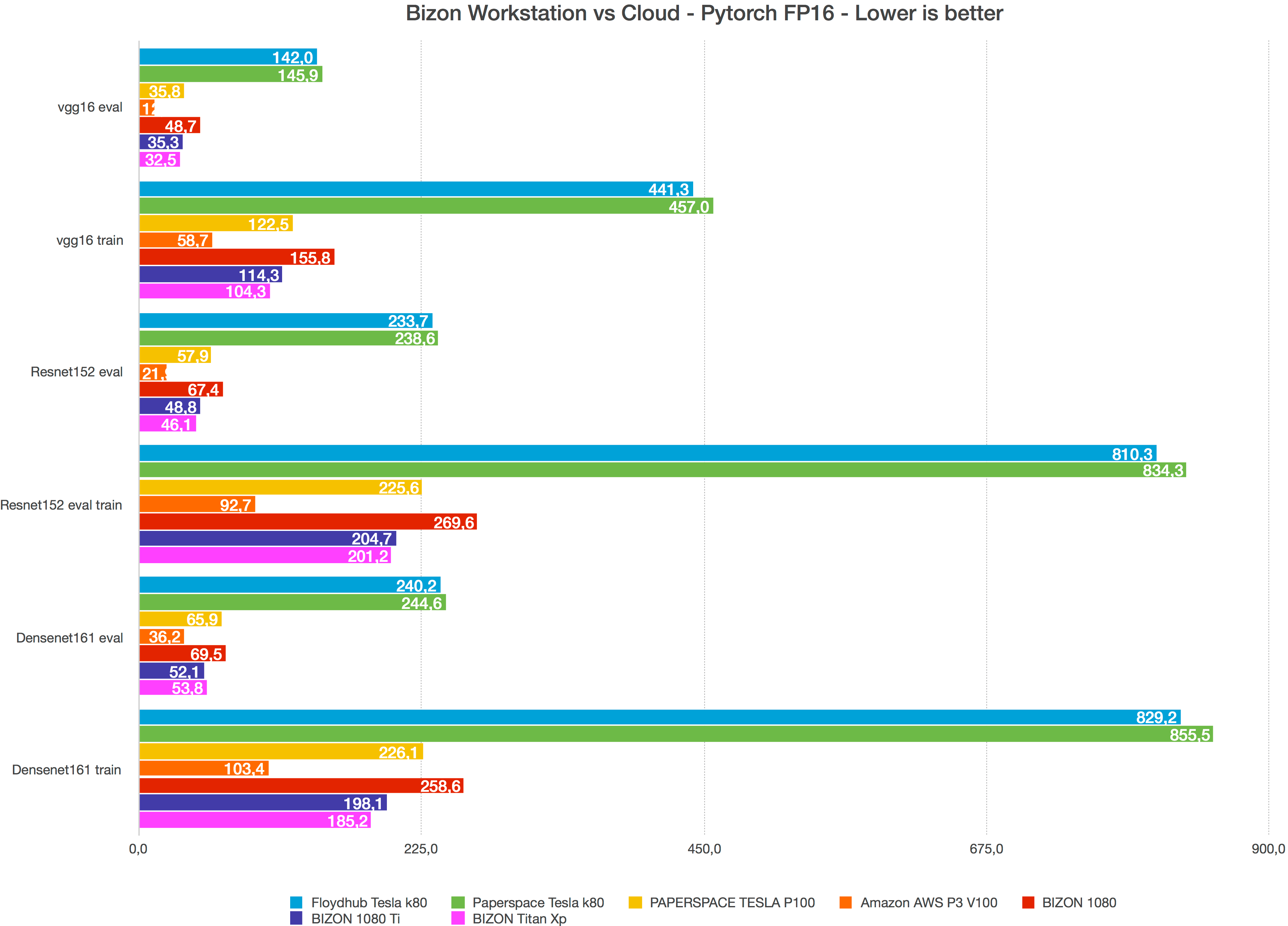

- Benchmarks

- Why AWS is so expensive

- Pick parts for optimum performance

- FAQ

Prebuilt vs Building your own Deep Learning Machine vs GPU Cloud (AWS)

If you’ve used or considered using Amazon Web Services, Azure, or Google Cloud for machine learning, you’ll have a good understanding of how costly graphics processing unit (GPU) time is.

Turning machines on and off can also seriously disrupt your workflow. However, there is a better, more cost-effective solution for deep learning: buy your own local deep learning computer. Not only is it up to 10x cheaper, it is also easier to use, and you can customize it to meet your own requirements.

In this article we explore the financial benefits of buying your own computer for deep learning.

You can buy a deep learning PC with 1 x NVIDIA GTX 1080 Ti GPU starting at $3k. This includes an 8-core CPU, 32 GB of RAM, and the NVIDIA GTX 1080 Ti, which is the best GPU in terms of price/performance.

Check the links below:

1x GPU Bizon V3000: More details

2x–4x GPU Bizon G3000: More details

However, if you’re going for the 2080 Ti, it’s best to get one with a blower fan, along with a 12-core CPU, 64 GB of RAM, and a 1 TB M.2 SSD.

If you compare this with building your own machine, you’ll arrive at about the same price.

The huge advantage of buying a prebuilt workstation is that you don’t need to find the right components, put them together, test and configure the system, or install an operating system and all the deep learning frameworks. You are also covered by a warranty and phone technical support from deep learning experts.

BIZON Plug-and-play workstations take you from power-on to deep learning in minutes.

Every BIZON workstation comes with preinstalled deep learning frameworks (TensorFlow, Keras, PyTorch, Caffe, Caffe2, and Theano), along with CUDA and cuDNN.

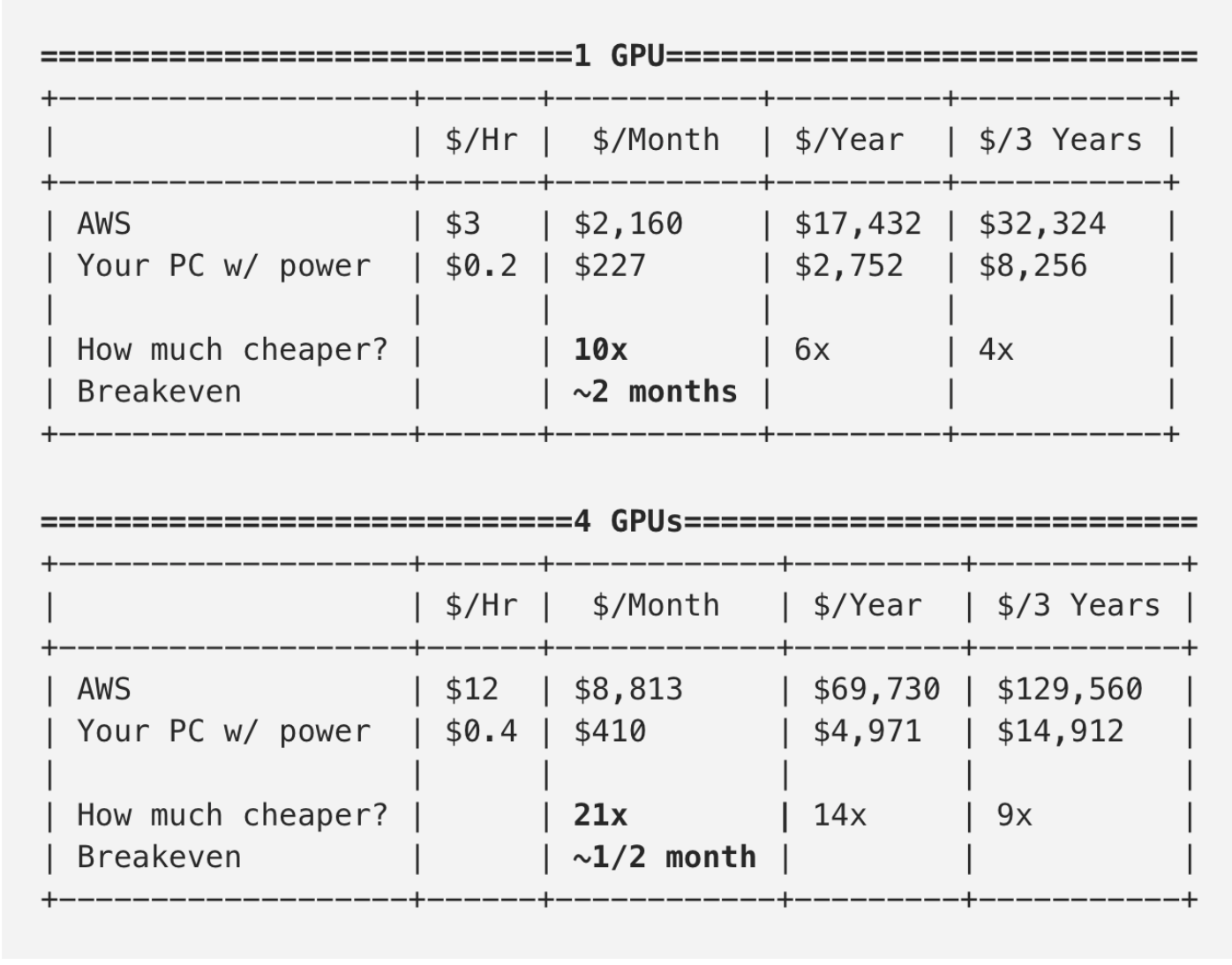

Assuming a 1-GPU deep learning computer depreciates to $0 in 3 years, the chart below shows that over 1 year of use it is up to 10 times cheaper than Amazon Web Services EC2, even after including electricity costs.

There are a few drawbacks to a local deep learning workstation, such as slower download speeds, the need for a static IP to access it from outside your home, and the likely need to upgrade your GPUs every so often. However, the savings are large enough to make it worthwhile.

Amazon also offers discounts to customers who commit to longer contracts: 35% for a one-year term and 60% for a three-year term. However, a long-term contract can be a huge ongoing financial commitment.
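
To put those discounts in perspective, here is a minimal sketch of what a committed contract might add up to. It assumes the $3 per hour on-demand rate quoted in this article and an instance that runs around the clock; the function name and the 24/7 usage pattern are illustrative assumptions, not Amazon's actual pricing model.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the instance runs around the clock


def committed_cloud_cost(on_demand_rate, discount, years):
    """Total cost of one always-on instance over a commitment term.

    on_demand_rate: undiscounted price in dollars per hour (assumed $3 here)
    discount: fractional discount, e.g. 0.35 for 35%
    years: length of the commitment in years
    """
    discounted_rate = on_demand_rate * (1 - discount)
    return discounted_rate * HOURS_PER_YEAR * years


one_year = committed_cloud_cost(3.00, 0.35, 1)
three_year = committed_cloud_cost(3.00, 0.60, 3)

print(f"1-year commitment at 35% off: ${one_year:,.0f}")
print(f"3-year commitment at 60% off: ${three_year:,.0f}")
```

Under these assumptions, even the discounted one-year total comes out at several times the $3k price of a 1-GPU workstation.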

If you do plan to spend money on a multi-year contract, you should stop and seriously consider buying your own machine for deep learning. By buying your own machine, you can customize it to your needs and save a huge amount of money. See how much you can save with your own deep learning PC:

A build with 1 GPU is up to 10 times cheaper, and one with 4 GPUs is up to 21 times cheaper, than web-based services over 1 year. This assumes electricity costs $0.20 per kWh, with a 1-GPU machine drawing 1 kW and a 4-GPU machine drawing 2 kW. Depreciation is assumed to be linear, with the machine fully depreciated after 3 years.
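
The arithmetic behind these ratios can be sketched roughly as follows. The electricity price, power draw, and 3-year linear depreciation come from the assumptions above, and the $3k and $5k machine prices and the $3 per hour cloud rate are the figures quoted elsewhere in this article. The $12 per hour rate for a 4-GPU cloud instance (4 x $3) and the round-the-clock usage are assumptions made for illustration only.

```python
HOURS_PER_YEAR = 24 * 365  # assumes the machine is busy around the clock


def local_cost_per_year(price, power_kw, price_per_kwh=0.20, lifetime_years=3):
    """Yearly cost of owning a workstation: linear depreciation plus electricity."""
    depreciation = price / lifetime_years
    electricity = power_kw * HOURS_PER_YEAR * price_per_kwh
    return depreciation + electricity


def cloud_cost_per_year(hourly_rate):
    """Yearly cost of an always-on cloud instance at a flat hourly rate."""
    return hourly_rate * HOURS_PER_YEAR


# (label, machine price in $, power draw in kW, assumed cloud rate in $/hour)
scenarios = [
    ("1 GPU", 3000, 1.0, 3.00),
    ("4 GPUs", 5000, 2.0, 12.00),  # $12/hour is an assumed 4 x $3 rate
]

ratios = {}
for label, price, power_kw, cloud_rate in scenarios:
    local = local_cost_per_year(price, power_kw)
    cloud = cloud_cost_per_year(cloud_rate)
    ratios[label] = cloud / local
    print(f"{label}: local ${local:,.0f}/yr, cloud ${cloud:,.0f}/yr, "
          f"cloud is {ratios[label]:.1f}x more expensive")
```

With these inputs the ratios land a little under 10x and a little over 20x, in line with the figures above. Lighter usage or a cheaper cloud rate would narrow the gap.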

At $3 per hour, and given that you have to pay even when you’re not using the machine, it’s no wonder that cloud-based services cost more. At around $2,100 a month for Amazon Web Services EC2, Google Cloud, or Microsoft Azure, the price is steep and unaffordable for most.

I previously used cloud services for a project and, even with careful monitoring and switching off the machine, I was faced with a whopping $1,000 bill. I’ve also tried Google Cloud and ended up with a bill of $1,800, which is over a third of what it cost to buy my own machine.

Even when you shut your machine down, you still have to pay for storage at $0.10 per GB per month. At that rate, every terabyte of stored data costs about $100 a month, so you can be charged hundreds of dollars each month just to store data.

With regular use, a $3k computer with 1 GPU drawing 1 kW will break even in only 2 months, and you will also own the computer. Your workstation won’t depreciate much in 2 months. With a 4-GPU version drawing 2 kW, you’ll break even in less than 1 month, assuming power costs $0.20 per kWh.
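
As a rough check on the break-even claim, the sketch below divides the purchase price by the amount saved per day compared with renting. It assumes the machine runs 24 hours a day, the $3 per hour cloud rate quoted in this article for 1 GPU, and an assumed $12 per hour (4 x $3) for 4 GPUs. The $5k price for the 4-GPU machine is the figure given in the FAQ.

```python
def break_even_days(price, power_kw, cloud_rate_per_hour,
                    price_per_kwh=0.20, hours_per_day=24):
    """Days of use after which buying becomes cheaper than renting.

    Each day of local use saves the cloud bill minus the electricity bill,
    so the purchase price is recovered after price / daily_saving days.
    """
    cloud_per_day = cloud_rate_per_hour * hours_per_day
    electricity_per_day = power_kw * hours_per_day * price_per_kwh
    daily_saving = cloud_per_day - electricity_per_day
    if daily_saving <= 0:
        raise ValueError("cloud is not more expensive; no break-even point")
    return price / daily_saving


one_gpu_days = break_even_days(3000, 1.0, 3.00)
four_gpu_days = break_even_days(5000, 2.0, 12.00)  # assumed 4 x $3 cloud rate

print(f"1 GPU:  break even after about {one_gpu_days:.0f} days")
print(f"4 GPUs: break even after about {four_gpu_days:.0f} days")
```

Under round-the-clock use this gives about a month and a half for 1 GPU and under 3 weeks for 4 GPUs. Passing a smaller `hours_per_day` stretches both figures out, which is why the estimates above are slightly more conservative.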

Performance is important, and a BIZON prebuilt workstation can easily perform just as well as anything that Amazon Web Services currently offers.

For example, an Nvidia 1080 Ti delivers 70-90% of the speed of the cloud Nvidia V100 GPU, which uses next-generation Volta technology. This is because cloud GPUs suffer from slow input/output (IO) between the instance and the GPU. Even though the V100 may be twice as fast in theory, in practice IO slows it down. With an M.2 SSD, IO on your local computer will be very fast.

The V100’s 16 GB gives you more memory than the 1080 Ti’s 11 GB, but if you make your batch sizes a little smaller and your models more efficient, you’ll be fine with 11 GB.

Compared to renting a last-generation Nvidia K80 online at $1 an hour, a 1080 Ti has awesome performance and is 4x faster in training speed, which I confirmed using benchmarks such as Yusaku Sako’s (https://github.com/u39kun/deep-learning-benchmark). However, the K80 has 12 GB per GPU, a small advantage over the 1080 Ti’s 11 GB.

If you’re wondering why Amazon is so expensive, it’s because they’re forced to use an expensive GPU. Data centers don’t use the GeForce 1080 Ti because Nvidia prohibits the use of GeForce and Titan cards in data centers. This is why Amazon and other providers have to use the $8,500 datacenter version and charge a lot to rent it.

All of our BIZON deep learning workstations are optimized for performance and designed to accommodate up to 4 GPUs. The CPU has 36+ PCIe lanes and more than 8 cores, the motherboard supports 4 GPUs, and the power supply is rated at 1600 W.

All of our product lineups use a stylish case made with aluminum and glass panels. With a personalized order process, you can choose any options you want.

We ensure that our computers run quietly by using water cooling. We also ensure that the parts make sense for machine learning. For example, a SATA3 SSD tops out at 600 MB per second, while an M.2 PCIe SSD is more than 5 times faster at 3.4 GB per second.
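
To get a feel for what that difference means in practice, here is a small sketch that estimates how long one sequential pass over a dataset would take at each drive's peak throughput. The 100 GB dataset size is a hypothetical example, and real-world read speeds are usually below these peak figures.

```python
def read_time_seconds(dataset_gb, throughput_mb_per_s):
    """Time to read a dataset once at a sustained throughput (1 GB = 1000 MB)."""
    return dataset_gb * 1000 / throughput_mb_per_s


DATASET_GB = 100  # hypothetical dataset size, for illustration only

sata_seconds = read_time_seconds(DATASET_GB, 600)    # SATA3 SSD, 600 MB/s
m2_seconds = read_time_seconds(DATASET_GB, 3400)     # M.2 PCIe SSD, 3.4 GB/s

print(f"SATA3 SSD: {sata_seconds:.0f} s per pass")
print(f"M.2 SSD:   {m2_seconds:.0f} s per pass")
print(f"Speedup:   {sata_seconds / m2_seconds:.1f}x")
```

For data that is re-read every epoch and does not fit in RAM, this per-pass saving repeats on each epoch.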

How many GPUs should I use?

If you’re not sure how much GPU power you require, start with a 2-GPU BIZON G3000 and add 2 more GPUs at a later date.

Why 4 GPUs?

With 4 GPUs you can reduce the overall costs over a specific period of time, as discussed in this article. Multiple GPUs can also increase performance and can provide better value for money.

What models can I train?

You can train any model provided you have data. GPUs are most useful for deep neural nets such as CNNs, RNNs, LSTMs, and GANs.

How does my computer compare to Nvidia’s $49,000 Supercomputer?

Nvidia’s Personal AI Supercomputer uses 4 GPUs (Tesla V100), a 20-core CPU, and 128 GB of RAM. I don’t have one so I don’t know for sure, but the latest benchmarks show a 25–80% speed improvement for the V100 over the 1080 Ti.

Nvidia states it’s 4 times faster, but their benchmark probably relies on the V100’s unique advantages, such as half-precision, that won’t materialize in practice. Remember that your own 4-GPU machine will cost around $5k, so in comparison a $49,000 machine is excessive and probably not necessary unless you have money to burn.

References

If you’re interested in reading more, check out the following articles, posts, and blogs by:

- 1x GPU Bizon V3000: More details

- 2x–4x GPU Bizon G3000 Deep learning workstation: More details

- Michael Reibel Boesen’s post

- Gokkulnath T S’s post

- Yusaku Sako’s post

- Tim Dettmers’s blog

- Vincent Chu’s post

- Tom’s Hardware’s article