Table of Contents

- Introduction

- Benefits of Using Open-Source LLMs

- Best Open-Source LLM Variants for 2025

- Fine-Tuning LLMs

- How to Run Open Source LLMs Locally

- Testing Open Source LLMs Online: A Guide to LLM Playgrounds

- Best Practices

- Recommended Hardware (GPUs) for Running LLM Locally

- BIZON Servers and Workstations Optimized for LLMs

- Conclusion

Best GPU for LLM Inference and Training in 2025 [Updated]

In the rapidly evolving landscape of artificial intelligence (AI) and machine learning (ML), Large Language Models (LLMs) stand out as one of the most exciting and transformative technologies. These models have the ability to understand, generate, and interpret human language with an astonishing level of nuance and precision, making them invaluable for a wide range of applications, from natural language processing and automated content creation to sophisticated data analysis and interpretation. However, harnessing the full power of LLMs requires not just advanced algorithms and software but also robust and powerful hardware, particularly Graphics Processing Units (GPUs).

This article delves into the heart of this synergy between software and hardware, exploring the best GPUs for both the inference and training phases of LLMs. We'll discuss the most popular open-source LLMs, the recommended GPUs/hardware for training and inference, and provide insights on how to run LLMs locally.

Additionally, we will explore the advantages of BIZON servers and workstations, specifically optimized for running LLMs locally, and provide recommendations on the GPUs required to harness the full potential of these models.

Benefits of Using Open-Source LLMs

- Cost-Effectiveness: Open-source models reduce the financial barriers to entry for experimenting with and deploying AI technologies.

- Customizability: Users can fine-tune and adapt these models to their specific needs, enhancing their applicability across diverse domains.

- Community Support: Open-source projects benefit from the collective expertise of a global community, leading to rapid iterations and improvements.

- Transparency and Trust: Access to the model's architecture and weights (and, for some models, the training data) fosters transparency, allowing users to inspect how the model works and better judge its outputs.

Best Open-Source LLM Variants for 2025

LLaMA 2

- Developer: Meta AI

- Parameters: Variants ranging from 7B to 70B parameters

- Pretrained on: A diverse dataset compiled from multiple sources, focusing on quality and variety

- Fine-Tuning: Supports fine-tuning on specific datasets for enhanced performance in niche tasks

- License Type: Llama 2 Community License, which permits commercial use with some restrictions

- Features: High efficiency and scalability, making it suitable for a wide range of applications

- GPU Requirements: For training, the 7B variant requires at least 24GB of VRAM, which in practice means parameter-efficient fine-tuning, while the 70B variant necessitates a multi-GPU configuration with 160GB of combined VRAM or more, such as 2x-4x NVIDIA A100 or NVIDIA H100 80GB GPUs. For inference, the 7B model can be run on a GPU with 16GB VRAM, but larger models benefit from 24GB VRAM or more, making the NVIDIA RTX 4090 a suitable option.
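As a rough check on training figures like these, the memory needed for full fine-tuning can be estimated from the parameter count. The sketch below assumes mixed-precision training with the Adam optimizer, where each parameter costs about 16 bytes (fp16 weights and gradients, plus fp32 master weights and two Adam moments). Activations are excluded, and the function name is illustrative rather than taken from any library. Under that assumption, a budget near 24GB for a 7B model is realistic only with memory-saving approaches such as LoRA-style adapters or quantization.

```python
# Assumed per-parameter cost for mixed-precision training with Adam.
BYTES_PER_PARAM = {
    "fp16_weights": 2,
    "fp16_gradients": 2,
    "fp32_master_weights": 4,
    "adam_momentum": 4,
    "adam_variance": 4,
}


def full_finetune_memory_gb(params_billions: float) -> float:
    """Estimate GB (1e9 bytes) for weights, gradients, and optimizer state.

    Activation memory is not included, so real usage is higher.
    """
    # Billions of parameters times bytes per parameter gives gigabytes directly.
    return params_billions * sum(BYTES_PER_PARAM.values())


for size in (7, 13, 70):
    print(f"{size}B parameters: ~{full_finetune_memory_gb(size):.0f} GB before activations")
```

The gap between this estimate and a single 24GB card is why the multi-GPU configurations mentioned above matter for the larger variants.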

Bloom

- Developer: BigScience, an open research collaboration coordinated by Hugging Face

- Parameters: 176 billion

- Pretrained on: The ROOTS corpus, a curated multilingual dataset covering 46 natural languages and 13 programming languages

- Fine-Tuning: Highly adaptable to fine-tuning for specific applications

- License Type: BigScience RAIL License, an open license with use-based restrictions aimed at responsible AI use

- Features: Designed for multilingual capabilities and ethical AI use

- GPU Requirements: Training Bloom demands a multi-GPU setup with each GPU having at least 40GB of VRAM, such as NVIDIA's A100 or H100. For inference, the full 176B model does not fit on any single GPU, so multiple GPUs such as the NVIDIA RTX 6000 Ada with 48GB of VRAM each, quantized weights, or both are needed to manage its extensive model size.
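To see why a model of Bloom's size needs several cards, a weights-only estimate is enough. This is a minimal sketch, assuming 16-bit weights (2 bytes per parameter) and ignoring the KV cache, activations, and framework overhead, so the GPU counts it prints are lower bounds. The helper names are hypothetical.

```python
import math


def weights_gb(params_billions: float, bytes_per_param: float = 2) -> float:
    """Weights-only footprint in GB (1e9 bytes)."""
    return params_billions * bytes_per_param


def min_gpus(params_billions: float, gpu_vram_gb: float, bytes_per_param: float = 2) -> int:
    """Smallest number of identical GPUs whose combined VRAM holds the weights."""
    return math.ceil(weights_gb(params_billions, bytes_per_param) / gpu_vram_gb)


bloom = 176  # billions of parameters
print(f"Bloom weights at 16-bit: {weights_gb(bloom):.0f} GB")
print(f"80GB cards needed (lower bound): {min_gpus(bloom, 80)}")
print(f"48GB cards needed (lower bound): {min_gpus(bloom, 48)}")
```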

BERT

- Developer: Google AI

- Parameters: 110 million to 340 million, depending on the variant

- Pretrained on: English Wikipedia and BooksCorpus

- Fine-Tuning: Extensively used for fine-tuning in various NLP tasks

- License Type: Open-source under Apache 2.0

- Features: Revolutionized NLP applications with its context-aware representations

- GPU Requirements: BERT's variants can be efficiently trained and run for inference on GPUs with 8GB to 16GB of VRAM, such as the NVIDIA RTX 3080 or 3090, making it accessible for most research and development projects.

Falcon

- Developer: Technology Innovation Institute (TII), Abu Dhabi

- Parameters: 7 billion, 40 billion, and 180 billion, depending on the variant

- Pretrained on: Primarily RefinedWeb, a filtered and deduplicated web dataset, supplemented with curated corpora

- Fine-Tuning: Supports fine-tuning, with tools and documentation provided by the community

- License Type: Apache 2.0 for the 7B and 40B models; the 180B model uses a separate TII license

- Features: Focus on lightweight, efficient models for faster inference

- GPU Requirements: Depending on the specific Falcon model variant, GPUs with at least 12GB to 24GB of VRAM are recommended. For the 7B model, a single high-end GPU like the RTX 4080 can suffice, whereas larger variants require more robust solutions such as the RTX 4090 or RTX 6000 Ada, typically in multi-GPU configurations or with quantization.

Zephyr

- Developer: Hugging Face (H4 team)

- Parameters: ~7 billion

- Pretrained on: Built on Mistral 7B, then fine-tuned on the UltraChat dataset and aligned using UltraFeedback preference data

- Fine-Tuning: Encourages fine-tuning with academic and research purposes in mind

- License Type: Open-source under the MIT License

- Features: Aims at high efficiency and low resource consumption

- GPU Requirements: Zephyr's efficiency allows it to be run on GPUs with as little as 16GB of VRAM for basic tasks. For more intensive tasks or larger datasets, GPUs with at least 24GB of VRAM, such as the NVIDIA RTX 4090, are recommended to ensure smooth operation.

Mistral 7B

- Developer: Mistral AI

- Parameters: 7 billion

- Pretrained on: Diverse internet-based dataset

- Fine-Tuning: Suitable for fine-tuning on specific tasks or datasets

- License Type: Open-source under Apache 2.0

- Features: Balance between size, performance, and computational requirements

- GPU Requirements: Mistral 7B can be trained on GPUs with at least 24GB of VRAM, making the RTX 6000 Ada or A100 suitable options for training. For inference, GPUs with at least 16GB of VRAM, such as the RTX 4090, offer adequate performance.
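Inference memory is not only weights: the key-value (KV) cache grows with context length and batch size. The sketch below estimates that cache for a decoder-only transformer. The Mistral 7B values used here (32 layers, 8 key-value heads, head dimension 128) are assumptions taken from the model's published configuration rather than from this article, and the function name is illustrative.

```python
def kv_cache_bytes(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    n_tokens: int,
    batch_size: int = 1,
    bytes_per_value: int = 2,  # 16-bit cache entries
) -> int:
    """Bytes needed to cache keys and values for every layer and token."""
    per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value  # 2 = K and V
    return per_token * n_tokens * batch_size


# Assumed Mistral 7B configuration: 32 layers, 8 KV heads, head dimension 128.
for context in (2048, 8192, 32768):
    gib = kv_cache_bytes(32, 8, 128, context) / 2**30
    print(f"{context:>6} tokens: {gib:.2f} GiB of KV cache")
```

Models that keep one key-value head per attention head (32 instead of 8 in this example) need four times as much cache for the same context, which is one reason identical parameter counts can have different inference footprints.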

Phi 2

- Developer: Microsoft Research

- Parameters: 2.7 billion

- Pretrained on: A mix of synthetic "textbook-quality" data and filtered web data

- Fine-Tuning: Highly adaptable for fine-tuning

- License Type: Open-source under the MIT License

- Features: Focuses on ethical considerations and bias mitigation

- GPU Requirements: At 2.7 billion parameters, Phi 2 has modest VRAM requirements and runs on 12GB to 24GB VRAM GPUs like the RTX 4080 or 4090. For fine-tuning with larger batch sizes or longer sequences, the RTX 6000 Ada or A100 is recommended.

MPT 7B

- Developer: MosaicML (now part of Databricks)

- Parameters: 7 billion

- Pretrained on: 1 trillion tokens of English text and code

- Fine-Tuning: Designed for easy fine-tuning across various domains

- License Type: Apache 2.0 for the base model; some fine-tuned variants carry non-commercial licenses

- Features: Combines performance with efficiency, making it suitable for multiple applications

- GPU Requirements: MPT 7B is optimized for efficiency, requiring GPUs with at least 16GB of VRAM for effective training and inference, such as the RTX 4080 or 4090. For more demanding applications, GPUs with higher VRAM, like the RTX 6000 Ada, provide additional flexibility and performance.

Alpaca

- Developer: Stanford Center for Research on Foundation Models (CRFM)

- Parameters: 7 billion (fine-tuned from LLaMA 7B)

- Pretrained on: Starts from LLaMA 7B and is fine-tuned on 52K instruction-following demonstrations generated with OpenAI's text-davinci-003

- Fine-Tuning: Supports fine-tuning, with an emphasis on ease of use

- License Type: Released for academic research only; commercial use is not permitted

- Features: Aims at high efficiency and ease of integration into applications

- GPU Requirements: With quantized weights, Alpaca can be run on GPUs with as little as 8GB VRAM, making it accessible for entry-level hardware. For optimal performance, especially when fine-tuning on larger datasets, GPUs with 16GB VRAM or more, such as the RTX 4080 or 4090, are recommended.
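Whether a 7B-class model fits an entry-level card depends mostly on the precision of its weights. The sketch below shows the idealized weights-only footprint at several bit widths; real quantized formats add some overhead for scaling factors and metadata, so treat the numbers as lower bounds. The function name is hypothetical.

```python
def quantized_weights_gb(params_billions: float, bits_per_weight: int) -> float:
    """Idealized weights-only footprint in GB (1e9 bytes) at a given bit width."""
    return params_billions * bits_per_weight / 8


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{quantized_weights_gb(7, bits):.1f} GB")
```

At 16-bit a 7B model already exceeds 8GB, so running it on such a card implies 8-bit or 4-bit weights.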

Fine-Tuning LLMs

Fine-tuning LLMs allows developers and researchers to leverage the vast knowledge encapsulated within these models, tailoring them to specific needs. The process can significantly improve performance on tasks such as text classification, question answering, and content generation. Below are detailed insights and resources to guide you through fine-tuning LLMs effectively.

Understanding the Basics

- Overview: Begin with a solid understanding of what fine-tuning entails. It's not just about training a model on new data but adjusting pre-trained models to align closely with your specific use cases.

- Resource: Hugging Face’s Fine-tuning Guide

Choosing the Right Model

- Overview: Select a model that closely aligns with your objectives. Models like BERT, GPT-3, or T5 have different strengths, and choosing the right base model is crucial for effective fine-tuning.

- Resource: Hugging Face Model Cards for detailed comparisons and capabilities of various models.

Preparing Your Dataset

- Overview: The quality and relevance of your dataset significantly impact the fine-tuning process. Ensure your data is clean, well-labeled, and representative of the task at hand.

- Tools: TensorFlow Datasets and PyTorch Data Utilities for data preparation and loading.
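The cleaning steps described above can be sketched with the standard library alone, before any framework-specific loading. This is a minimal illustration, assuming a labeled text-classification dataset held as `(text, label)` pairs; the function name, the split fraction, and the sample rows are all hypothetical.

```python
import random


def prepare_examples(rows, eval_fraction=0.1, seed=0):
    """Clean (text, label) pairs and split them into train and eval lists."""
    seen = set()
    cleaned = []
    for text, label in rows:
        text = " ".join(text.split())  # collapse runs of whitespace
        if not text or label is None:  # drop empty or unlabeled rows
            continue
        if text in seen:               # drop exact duplicates
            continue
        seen.add(text)
        cleaned.append((text, label))

    rng = random.Random(seed)          # fixed seed keeps the split reproducible
    rng.shuffle(cleaned)
    n_eval = max(1, int(len(cleaned) * eval_fraction)) if cleaned else 0
    return cleaned[n_eval:], cleaned[:n_eval]


sample = [
    ("Great  product", "pos"),
    ("Great product", "pos"),   # duplicate after whitespace cleanup
    ("", "neg"),                # empty text
    ("Arrived broken", "neg"),
    ("Unlabeled row", None),    # missing label
    ("Works as described", "pos"),
]
train, held_out = prepare_examples(sample, eval_fraction=0.25)
print(len(train), "training rows,", len(held_out), "evaluation rows")
```

Holding out the evaluation rows before fine-tuning starts keeps later metrics from being inflated by examples the model has already seen.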

Fine-Tuning Strategies

- Overview: Effective fine-tuning strategies include gradual unfreezing, discriminative learning rates, and employing regularization techniques to avoid overfitting.

- Guide: "Universal Language Model Fine-tuning for Text Classification" for insights into advanced fine-tuning strategies.
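Two of the strategies named above can be expressed as simple schedules. The sketch below assumes a model viewed as a stack of layer groups indexed from bottom (0) to top; it follows the ULMFiT convention of dividing the learning rate by 2.6 for each step down the stack, and unfreezes one more group per epoch starting from the top. The function names are illustrative and framework-independent.

```python
def discriminative_lrs(top_lr: float, n_layers: int, decay: float = 2.6) -> list:
    """Learning rate per layer group; index 0 is the lowest, the last is the top."""
    return [top_lr / decay ** (n_layers - 1 - i) for i in range(n_layers)]


def trainable_layers(epoch: int, n_layers: int) -> list:
    """Gradual unfreezing: epoch 0 trains only the top group, then one more per epoch."""
    n_unfrozen = min(n_layers, epoch + 1)
    return list(range(n_layers - n_unfrozen, n_layers))


lrs = discriminative_lrs(top_lr=1e-3, n_layers=4)
for epoch in range(4):
    active = trainable_layers(epoch, n_layers=4)
    print(f"epoch {epoch}: train groups {active} with lrs {[f'{lrs[i]:.2e}' for i in active]}")
```

In a real training loop these values would be passed to the optimizer as per-group learning rates, with frozen groups excluded from gradient updates.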

Utilizing Fine-Tuning Platforms

- Overview: Platforms like Google Colab offer an accessible environment with computational resources for fine-tuning models without significant hardware investment.

- Resource: Google Colab for free access to GPUs and TPUs for training.

Monitoring and Evaluation

- Overview: Continuous monitoring and evaluation are crucial. Use metrics relevant to your task to assess the model's performance and make iterative improvements.

- Tools: Weights & Biases for tracking experiments, visualizing changes, and optimizing your models.
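For a classification task, "metrics relevant to your task" often means precision, recall, and F1 on a held-out set. The following is a small, dependency-free sketch for the binary case; the function name and sample labels are hypothetical, and in practice the resulting numbers would be logged to whatever experiment tracker is in use.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the given positive label."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


labels = [1, 1, 0, 0, 1, 0]
predictions = [1, 0, 1, 0, 1, 0]
p, r, f = precision_recall_f1(labels, predictions)
print(f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
```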

How to Run Open Source LLMs Locally

Running open-source LLMs locally involves several steps, including setting up the appropriate hardware environment, installing necessary software dependencies, and configuring the model parameters. The process typically involves:

- Hardware Setup: Ensuring your system meets the GPU VRAM requirements of the chosen LLM.

- Software Installation: Installing programming environments such as Python, along with libraries like TensorFlow or PyTorch, and the specific LLM package.

- Model Configuration: Loading the pre-trained model and configuring it for your specific use case, including fine-tuning if necessary.
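The hardware check in the first step can be automated. The sketch below reads total VRAM per GPU by calling `nvidia-smi` with its `--query-gpu=memory.total --format=csv,noheader,nounits` options, which report values in MiB. It assumes an NVIDIA driver is installed; when the tool is missing or fails, the function returns an empty list instead of raising. The helper names are illustrative.

```python
import shutil
import subprocess


def parse_vram_mib(smi_output: str) -> list:
    """Parse one integer MiB value per line, skipping blanks and non-numeric rows."""
    return [int(line.strip()) for line in smi_output.splitlines() if line.strip().isdigit()]


def gpu_vram_mib() -> list:
    """Total VRAM in MiB for each visible NVIDIA GPU, or [] if unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


cards = gpu_vram_mib()
if cards:
    for index, mib in enumerate(cards):
        print(f"GPU {index}: {mib / 1024:.1f} GiB")
else:
    print("No NVIDIA GPU detected (or nvidia-smi is not on PATH).")
```

Comparing these figures against the VRAM requirement of the chosen model before downloading weights avoids a failed load later.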

Contact BIZON engineers if you have any questions about configuring your next workstation for LLMs. Explore our lineup of workstations and NVIDIA GPU servers optimized for running LLMs.

Testing Open Source LLMs Online: A Guide to LLM Playgrounds

Exploring and testing Large Language Models (LLMs) has become more accessible than ever before, thanks to a variety of online platforms designed specifically for interactive experimentation with these advanced AI systems. These "LLM Playgrounds" offer users the ability to engage directly with various models, providing hands-on experience without the need for local hardware setups or extensive computational resources. Below, we delve into some of the most prominent platforms where you can test open-source LLMs online, complete with direct links to their playgrounds.

-

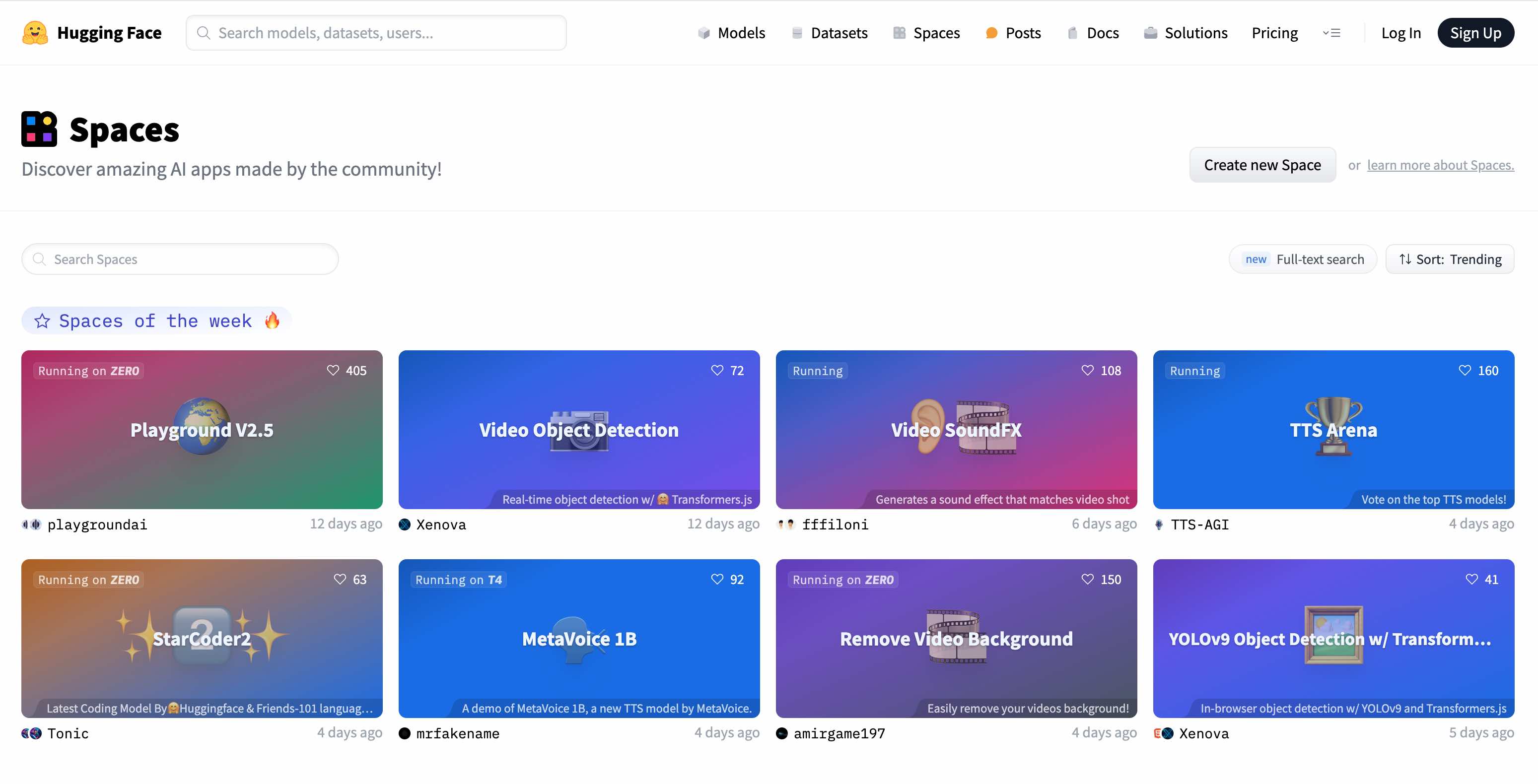

Hugging Face Spaces

- Overview: A collaborative platform that enables users to explore and share interactive machine learning projects. It features a wide variety of LLMs, including the latest models for NLP tasks, providing an ideal space for experimentation and discovery.

- URL: https://huggingface.co/spaces

GPT4ALL

- Overview: An open-source ecosystem from Nomic AI dedicated to making GPT-style models more accessible to the public. It allows users to download and interact with a range of open models on consumer hardware, offering insights into their generative capabilities and performance across different tasks.

- URL: https://gpt4all.io

OpenAI’s Codex

- Overview: Designed by OpenAI, Codex excels at understanding and generating human-like code, making it a powerful tool for developers. Its playground provides an interactive environment to explore Codex’s capabilities, from code generation to problem-solving.

- URL: https://openai.com/codex

Talk to Transformer’s GPT-3 Playground

- Overview: An accessible interface for interacting with GPT-3, allowing users to input text prompts and receive generated text responses. It showcases the model's ability to continue text, answer questions, and more, making AI experimentation fun and straightforward.

- URL: https://talktotransformer.com

Google Colab

- Overview: Google Colab provides a free, cloud-based Jupyter notebook environment that includes free access to GPUs and TPUs. It's an excellent resource for running and testing LLMs, with extensive support for various machine learning libraries and frameworks.

- URL: https://colab.research.google.com/

These LLM playgrounds represent the forefront of interactive AI exploration, offering anyone with internet access the ability to experiment with advanced language models. Whether for educational purposes, research, or simple curiosity, these platforms demystify AI technologies, making them more approachable and understandable.

Choosing the Right Open-Source LLM for Your Needs

Selecting the right LLM depends on several factors, including:

- Task Complexity: More complex tasks may require models with a higher number of parameters.

- Resource Availability: Larger models require more powerful hardware and longer training times.

- Specific Requirements: Certain models may be better suited for specific languages, domains, or ethical considerations.

Best Practices

- Data Privacy: Ensure that the use of LLMs complies with data privacy regulations and ethical guidelines.

- Resource Management: Optimize computational resources to balance between model performance and operational costs.

- Continuous Learning: Stay updated with the latest developments in LLMs to leverage improvements and updates.

Recommended Hardware (GPUs) for Running LLM Locally

Choosing the right GPU is crucial for efficiently running Large Language Models (LLMs) locally. The computational demands and VRAM requirements vary significantly across different models. Below, we explore the latest NVIDIA GPUs and their suitability for running various LLMs, considering their VRAM and performance capabilities.

- NVIDIA RTX 4090: With 24GB of GDDR6X VRAM, the RTX 4090 is a powerhouse for running small to medium LLMs. Its advanced architecture provides significant speed improvements, making it suitable for the smaller variants of LLaMA 2 and Bloom; larger variants need quantization or multiple GPUs. The RTX 4090's tensor cores and AI acceleration features enable faster fine-tuning and inference, making it a versatile choice for researchers and developers.

- NVIDIA RTX 6000 Ada: Designed for professional and industrial applications, the RTX 6000 Ada comes with 48GB of GDDR6 VRAM, offering ample capacity for mid-sized LLMs on a single card. The largest models, such as the full 176B Bloom, still require several cards working together. Its robust computational capabilities are ideal for extensive model training sessions and complex simulations, providing both performance and reliability for critical tasks.

- NVIDIA A100: The A100, with options for 40GB of HBM2 or 80GB of HBM2e VRAM, is tailored for data centers and high-performance computing tasks. Its massive memory bandwidth and parallel processing capabilities make it an excellent choice for training and running the most demanding LLMs, including those with tens of billions of parameters. The A100's support for multi-instance GPU (MIG) also allows for efficient resource allocation, enabling multiple tasks to run concurrently on isolated slices of the GPU.

- NVIDIA H100: A flagship of NVIDIA's data center lineup, the H100 offers 80GB of HBM3 VRAM in its SXM form. It is engineered for AI workloads, with features like the Transformer Engine designed specifically to accelerate tasks such as training large language models. The H100's computational throughput and efficiency make it a leading choice for cutting-edge AI research and applications that require the processing of vast datasets and complex model architectures.

When selecting a GPU for running LLMs locally, consider the specific model's VRAM and computational requirements. Smaller models can run effectively on GPUs with less VRAM, such as the RTX 4090, whereas larger models like Bloom or GPT variants might require the high-end capacities of the RTX 6000 Ada, A100, or H100, often in multi-GPU configurations. It's also essential to balance the GPU's cost against the intended use case, whether for development, research, or production environments.
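The selection logic above can be sketched as a simple filter over the VRAM capacities listed in this section. The 20% headroom reserved for the KV cache, activations, and framework overhead is an assumption chosen for illustration, not a measured figure, and the function name is hypothetical.

```python
# VRAM capacities in GB, as listed in this section.
GPUS_GB = {
    "NVIDIA RTX 4090": 24,
    "NVIDIA A100 40GB": 40,
    "NVIDIA RTX 6000 Ada": 48,
    "NVIDIA A100 80GB": 80,
    "NVIDIA H100": 80,
}


def single_gpu_options(required_gb: float, headroom: float = 0.2) -> list:
    """GPUs whose VRAM, minus the reserved headroom, holds the model on one card."""
    usable = 1.0 - headroom
    return [name for name, vram in GPUS_GB.items() if vram * usable >= required_gb]


for label, needed in (("7B at 16-bit", 14), ("20B at 16-bit", 40), ("176B at 16-bit", 352)):
    options = single_gpu_options(needed)
    print(f"{label} ({needed} GB): {options if options else 'needs multiple GPUs'}")
```

An empty result signals that the model has to be split across several cards or quantized before it can run on a single one.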

BIZON Servers and Workstations Optimized for LLMs

BIZON specializes in high-performance computing solutions tailored for AI and machine learning workloads.

If you have any questions about building your next high-performance workstation, BIZON engineers are ready to help. Explore our workstations optimized for generative AI and NVIDIA GPU servers.

- High-Performance Hardware: Equipped with the latest GPUs and CPUs to handle large models and datasets efficiently.

- Scalability: Designed to scale up, allowing additional GPUs to be added as computational needs grow.

- Reliability: Built with enterprise-grade components for 24/7 operation, ensuring consistent performance.

- Support: Comprehensive support and maintenance services to assist with setup, optimization, and troubleshooting.

Conclusion

Selecting the right GPU for LLM inference and training is a critical decision that can significantly influence the efficiency, cost, and success of AI projects. Through this article, we have explored the landscape of GPUs and hardware that are best suited for the demands of LLMs, highlighting how technological advancements have paved the way for more accessible and powerful AI research and development. Whether you're a seasoned AI researcher, a developer looking to integrate LLM capabilities into your projects, or an organization aiming to leverage the latest in AI and deep learning, understanding the hardware requirements and options is essential.

BIZON GPU servers and AI-ready workstations emerge as formidable choices for those seeking to dive deep into the world of AI, offering specialized solutions that cater to the intensive demands of LLM training and inference. As the field of AI continues to advance, staying informed about the best tools and technologies will remain paramount for anyone looking to push the boundaries of what's possible with Large Language Models.